Machine Learning for Healthcare Coding and Compliance Audits

In 2026, scrutiny of healthcare coding and compliance audits has reached new levels of intensity. According to a 2025 survey by Experian Health, 54% of providers reported that claim errors are increasing and 41% said their denial rate is 10% or higher. Meanwhile, data from audits tracked by MDaudit show that the average at-risk amount per claim increased by 18% year over year in 2025.

For revenue cycle managers, compliance officers, and HIM leaders, these trends signal a greater urgency: insufficient documentation, coding variation, or inconsistent audit readiness can now translate directly into denials, revenue loss, and regulatory exposure.

As clinical documentation becomes more complex and payer audit programs expand in scope and intensity, organizations must build stronger workflows that support coding accuracy, documentation integrity, and audit defensibility without overburdening staff or systems.

Key Takeaways

- Healthcare coding and compliance audit activity is increasing as payers intensify reviews and documentation standards continue to rise.

- Machine learning improves audit accuracy by interpreting clinical documentation consistently and identifying coding issues that manual review may overlook.

- ML supports audit readiness by detecting documentation-coding conflicts, ranking high-risk encounters, validating HCC conditions, and creating traceable evidence paths.

- ML adds value beyond traditional QA by monitoring shifts in coding behavior, highlighting gaps in evolving documentation, and helping teams focus on cases with the greatest audit exposure.

- Effective adoption requires a clear implementation framework that defines ML boundaries, integrates models at the documentation layer, establishes coder review pathways, and tracks audit-focused performance metrics.

- Enterprise platforms such as RapidClaims apply ML through NLP extraction, payer-aligned audit rules, traceability logs, and human-in-the-loop verification to support compliant and defensible coding operations.

- Real-world use cases include multi-day documentation review, modifier validation, E/M element checks, multi-specialty alignment, and attestation confirmation.

Table of Contents:

- Current State of Healthcare Coding and Compliance Audit Programs

- How Machine Learning Supports Healthcare Coding and Compliance Audit Accuracy

- Opportunities to Strengthen Healthcare Coding and Compliance Audit Workflows With ML

- Risks and Limitations of ML in Healthcare Coding and Compliance Audit Processes

- Implementation Framework for ML in Healthcare Coding and Compliance Audit Operations

- How RapidClaims Uses ML in Healthcare Coding and Compliance Audit Review

- Practical Use Cases of ML in Healthcare Coding and Compliance Audit Review

- Conclusion

- FAQs

Current State of Healthcare Coding and Compliance Audit Programs

As organizations move into 2026, coding and audit teams face widening gaps between documentation complexity, payer expectations, and available review capacity. This is reshaping the operational realities of healthcare coding and compliance audit functions.

Payers are increasing pre-payment and post-payment reviews that target coding accuracy, diagnosis validation, and documentation sufficiency. CMS has expanded Medicare Advantage scrutiny, and commercial plans continue to tighten audit criteria. These changes place more pressure on internal teams to ensure every code is supported, consistent, and defensible.

Common challenges include:

- Variation in ICD-10, CPT, and HCC code selection across coders

- Documentation gaps that affect medical necessity and audit outcomes

- Limited capacity to perform thorough internal audits at scale

- Increased payer requests for supplemental records and coding justification

- Rising administrative costs tied to denials, rework, and appeals

Manual chart review remains valuable, but teams struggle to keep pace with growing encounter volumes and more granular coding rules. As audit programs expand, organizations increasingly need workflows that support consistent interpretation of clinical text, early detection of coding issues, and stronger audit readiness.

How Machine Learning Supports Healthcare Coding and Compliance Audit Accuracy

Machine learning is becoming an operational tool for healthcare coding and compliance audit teams because it can interpret clinical content at the scale and precision required for modern review environments. Its value lies in analyzing patterns and relationships that are difficult for manual processes to detect.

ML contributes to coding and audit accuracy in several targeted ways:

Interpreting clinical language with consistency

ML and NLP models can evaluate physician notes, lab results, imaging summaries and embedded abbreviations to identify clinical indicators that support or contradict assigned codes. This generates more standardized interpretation across coders and reduces subjective variation in complex cases.

Detecting coding inconsistencies that trigger audit attention

Certain patterns increase the likelihood of a payer audit, such as:

- Diagnoses that appear without sufficient clinical indicators

- Procedure-to-diagnosis mismatches

- HCC conditions documented once but not linked across the encounter

- Code combinations that require explicit supporting documentation

ML systems can highlight these inconsistencies before claims are submitted, giving teams the opportunity to correct and document proactively.
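A minimal sketch of how two of the patterns above (procedure-to-diagnosis mismatches and diagnoses without clinical indicators) could be checked before submission. The code labels, the `SUPPORTING_DX` map, and the `Encounter` fields are hypothetical placeholders, not real code sets or payer policy; in practice the indicator set would come from an NLP model rather than being supplied by hand.

```python
from dataclasses import dataclass, field

# Hypothetical coverage map: procedure label -> diagnosis labels that support it.
# Real mappings come from payer policy; these labels are placeholders.
SUPPORTING_DX = {
    "PROC_ECG": {"DX_CHEST_PAIN", "DX_ARRHYTHMIA"},
    "PROC_A1C": {"DX_DIABETES"},
}


@dataclass
class Encounter:
    encounter_id: str
    procedures: list[str]
    diagnoses: list[str]
    # Diagnoses for which the note contains at least one clinical indicator
    # (assumed to be produced upstream, e.g. by an NLP extraction step).
    indicated_dx: set[str] = field(default_factory=set)


def find_conflicts(enc: Encounter) -> list[str]:
    """Return human-readable findings for one encounter."""
    findings = []
    coded_dx = set(enc.diagnoses)

    # Procedure-to-diagnosis mismatch: a mapped procedure with no supporting diagnosis.
    for proc in enc.procedures:
        allowed = SUPPORTING_DX.get(proc)
        if allowed is not None and not (allowed & coded_dx):
            findings.append(f"{proc}: no supporting diagnosis coded")

    # Diagnosis coded without any clinical indicator in the note.
    for dx in enc.diagnoses:
        if dx not in enc.indicated_dx:
            findings.append(f"{dx}: no clinical indicator found in note")

    return findings


enc = Encounter("E1", ["PROC_ECG", "PROC_A1C"], ["DX_DIABETES"], {"DX_DIABETES"})
print(find_conflicts(enc))
```

Procedures missing from the map are skipped rather than flagged, so gaps in the map do not generate noise for coders.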

Prioritizing encounters with higher audit exposure

ML can rank encounters based on factors associated with audit risk. Examples include inconsistent problem lists, missing chronic condition linkage, or abrupt changes in patient acuity. This prioritization allows internal auditors to focus on cases most likely to generate payer queries.
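As an illustration, the ranking can be approximated with a simple additive score over the risk factors named above. The factor names and point values in `RISK_POINTS` are assumptions chosen for the example; a production model would learn such weights from audit outcomes.

```python
# Hypothetical point values per risk factor; not derived from real audit data.
RISK_POINTS = {
    "inconsistent_problem_list": 40,
    "missing_chronic_linkage": 35,
    "abrupt_acuity_change": 25,
}


def risk_score(flags: dict[str, bool]) -> int:
    """Sum the points of every risk factor present on the encounter."""
    return sum(points for name, points in RISK_POINTS.items() if flags.get(name, False))


def rank_encounters(encounters: dict[str, dict[str, bool]]) -> list[tuple[str, int]]:
    """Return (encounter_id, score) pairs, highest score first, ties broken by id."""
    scored = [(eid, risk_score(flags)) for eid, flags in encounters.items()]
    return sorted(scored, key=lambda pair: (-pair[1], pair[0]))


queue = rank_encounters({
    "A": {"abrupt_acuity_change": True},
    "B": {"inconsistent_problem_list": True, "missing_chronic_linkage": True},
    "C": {},
})
print(queue)
```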

Strengthening validation for HCC and risk-adjusted claims

HCC v28 introduces more granular diagnostic groupings. ML tools can verify whether the documentation supports risk-adjusting conditions by checking linkage between symptoms, assessments and treatment plans. This helps reduce RAF discrepancies and downstream correction requests.

Providing traceability for audit defensibility

ML models can capture why specific documentation cues support a code recommendation. This creates an audit trail that helps compliance teams explain coding decisions during payer reviews, especially for conditions that require consistent longitudinal evidence.
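One way to picture such an audit trail is a record that ties each suggested code to the exact span of text that supports it. The sketch below is an assumed structure, not a description of any vendor's log format; `Evidence`, `CodeSuggestion`, and the code label are hypothetical names.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class Evidence:
    note_id: str
    start: int  # character offset where the supporting phrase begins
    end: int    # character offset just past the supporting phrase
    text: str


@dataclass(frozen=True)
class CodeSuggestion:
    code: str
    confidence: float
    evidence: tuple[Evidence, ...]


def build_evidence(note_id: str, note_text: str, phrase: str) -> Evidence:
    """Locate a phrase in the note and record its offsets for later review."""
    start = note_text.find(phrase)
    if start == -1:
        raise ValueError(f"phrase not found in note {note_id}: {phrase!r}")
    return Evidence(note_id, start, start + len(phrase), phrase)


def to_audit_log(suggestion: CodeSuggestion) -> str:
    """Serialize the suggestion and its evidence as one JSON log line."""
    return json.dumps(asdict(suggestion), sort_keys=True)


note = "Assessment: type 2 diabetes, on metformin."
ev = build_evidence("N1", note, "type 2 diabetes")
print(to_audit_log(CodeSuggestion("DX_DIABETES", 0.9, (ev,))))
```

Storing offsets alongside the quoted text lets a reviewer confirm that the snippet still matches the source note at audit time.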

Opportunities to Strengthen Healthcare Coding and Compliance Audit Workflows With ML

Machine learning opens specific operational opportunities that strengthen audit performance and coding reliability beyond what manual review or rule-based tools can achieve.

Identifying patterns that traditional QA processes miss

ML can analyze multi-encounter trends, such as recurring discrepancies for a provider or service line, which helps audit teams detect localized coding issues before they escalate into payer findings.

Monitoring real-time shifts in coding behavior

Because ML models track coding outputs continuously, they can flag unusual changes in code usage frequency. This helps organizations detect emerging risks such as upcoding patterns, incomplete chronic condition capture, or sudden deviations from payer-expected distributions.
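A simple version of this monitoring compares each code's share of total volume in the current period against a baseline period. The sketch below assumes a fixed absolute threshold of 10 percentage points and uses placeholder code labels; real monitoring would likely use statistical tests and payer-specific benchmarks.

```python
from collections import Counter


def usage_shares(codes: list[str]) -> dict[str, float]:
    """Return each code's share of total volume (0.0 to 1.0)."""
    counts = Counter(codes)
    total = sum(counts.values())
    return {code: n / total for code, n in counts.items()} if total else {}


def flag_shifts(baseline: list[str], current: list[str],
                threshold: float = 0.10) -> dict[str, float]:
    """Return codes whose share moved by at least `threshold` (assumed cutoff)."""
    base, cur = usage_shares(baseline), usage_shares(current)
    shifts = {}
    for code in sorted(set(base) | set(cur)):
        delta = cur.get(code, 0.0) - base.get(code, 0.0)
        if abs(delta) >= threshold:
            shifts[code] = round(delta, 4)
    return shifts


# Placeholder labels standing in for two E/M levels.
last_quarter = ["EM_L3"] * 70 + ["EM_L4"] * 30
this_quarter = ["EM_L3"] * 50 + ["EM_L4"] * 50
print(flag_shifts(last_quarter, this_quarter))
```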

Highlighting documentation elements that influence audit outcomes

ML can surface documentation elements that most strongly affect audit decisions. Examples include missing diagnostic qualifiers, incomplete interval histories, or notes that do not align with billed acuity levels. These insights guide targeted education for clinicians and coders.

Supporting concurrent review during active encounters

ML tools can evaluate evolving documentation while a patient visit is still open. This gives coders early visibility into documentation gaps that could create audit exposure and allows teams to request clarifications while the encounter is fresh.

Enhancing internal audit sampling strategies

Instead of relying on random or risk-tier sampling, ML can create optimized audit samples based on probability of error, financial exposure, or payer sensitivity. This improves the efficiency and precision of internal compliance audits.
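To make the idea concrete, the sketch below builds an audit sample by ranking claims on expected exposure, defined here as the probability of error multiplied by the billed amount. That definition, the `Claim` fields, and the figures are assumptions for illustration; the error probability would come from a model that is not shown.

```python
from dataclasses import dataclass


@dataclass
class Claim:
    claim_id: str
    error_probability: float  # assumed output of an upstream model, 0.0 to 1.0
    billed_amount: float


def expected_exposure(claim: Claim) -> float:
    """Expected dollars at risk if the claim is audited."""
    return claim.error_probability * claim.billed_amount


def select_audit_sample(claims: list[Claim], sample_size: int) -> list[Claim]:
    """Pick the claims with the highest expected exposure, ties broken by id."""
    ranked = sorted(claims, key=lambda c: (-expected_exposure(c), c.claim_id))
    return ranked[:sample_size]


claims = [
    Claim("C1", 0.5, 100.0),
    Claim("C2", 0.1, 2000.0),
    Claim("C3", 0.25, 200.0),
]
print([c.claim_id for c in select_audit_sample(claims, 2)])
```

A purely top-ranked sample never reviews low-score claims, so teams may still want to mix in a small random slice to check the model itself.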

Explore how ML can strengthen your coding accuracy and audit readiness. Request a working session with our team to evaluate where automation can support your RCM workflows.

Risks and Limitations of ML in Healthcare Coding and Compliance Audit Processes

While ML strengthens audit accuracy and coding integrity, it also introduces operational and compliance risks that organizations must manage carefully.

Variability in how models interpret clinical nuance

Clinical concepts are not always documented consistently. If models over-weight or under-weight subtle cues such as chronic condition qualifiers or time-based procedure details, they can introduce new errors that auditors must later reconcile. This is especially impactful for encounters with layered diagnoses or multi-disciplinary notes.

Risk of reinforcing provider-specific or historical documentation patterns

If training data reflects local habits or outdated coding practices, ML models may learn patterns that diverge from current payer expectations. This can create alignment issues during external audits, where documentation must meet national or contract-specific standards rather than historical internal norms.

Challenges mapping model outputs to payer-specific audit logic

Many payers apply proprietary validation rules. An ML system may flag an issue that a payer does not consider material, or fail to catch conditions that a particular payer scrutinizes closely. Without payer-aligned calibration, ML outputs can create both false confidence and unnecessary rework.

Limited transparency in cases involving multi-source documentation

When models synthesize data across physician notes, ancillary reports, and imported external records, it can be difficult for audit teams to trace how each data element contributed to a recommended code. This lack of clarity complicates audit defense because external reviewers expect a clear chain of documentation support.

Operational friction when ML recommendations conflict with coder judgement

Discrepancies between ML findings and human interpretation require resolution. If workflows do not clearly define how to adjudicate these conflicts, coding teams may experience delays, inconsistent decisions, or uneven application of edits across service lines.

Model degradation as documentation patterns evolve

Changes in templates, specialty-level documentation trends, or new clinical practice guidelines can reduce model accuracy over time. Without structured monitoring and periodic recalibration, ML-supported audit workflows may deteriorate quietly and produce incorrect signals.

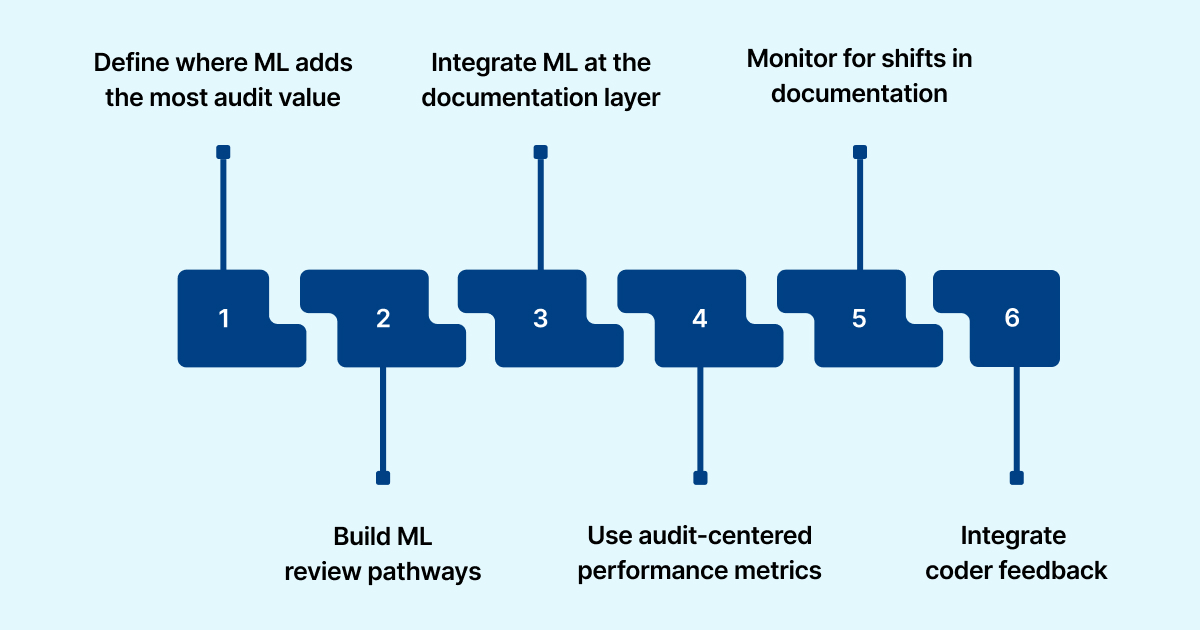

Implementation Framework for ML in Healthcare Coding and Compliance Audit Operations

Introducing machine learning into healthcare coding and compliance audit workflows requires a structured framework that protects accuracy, maintains audit defensibility, and aligns with RCM operational realities. Effective teams move beyond simple model deployment and establish governance that ensures ML outputs support compliant decision making.

- Define where ML adds the most audit value: Teams should target coding areas with predictable documentation patterns and high audit sensitivity. Examples include validation of chronic conditions, alignment between diagnoses and procedures, and verification of hierarchical condition category (HCC) assignments.

- Create structured review pathways for ML recommendations: ML outputs must enter coder workflows with clear labels. Advisory prompts highlight potential documentation gaps, while actionable edits are reserved for cases where supporting evidence is strong. This preserves coder judgment and ensures transparent audit trails.

- Integrate ML at the documentation layer: Embedding ML within EHR or RCM documentation review ensures findings are based on the source record rather than the finalized claim. This enables earlier detection of issues and maintains traceability for audit review.

- Use audit-centered performance metrics: Model evaluation should focus on outcomes that affect audit reliability. Relevant metrics include alignment with payer audit findings, reduction in internal QA exceptions and stability of ML recommendations across different provider documentation styles.

- Monitor for shifts in documentation or coding patterns: Model behavior should be tracked for drift, such as changes in flag rates or declining confidence in specific code groups. Early detection helps teams recalibrate models before inaccuracies impact submitted claims.

- Incorporate coder feedback into model refinement: Coder validation is essential for compliance. Feedback loops allow coders to confirm or reject ML suggestions, supporting continuous improvement while keeping recommendations consistent with payer expectations.
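The review-pathway and feedback steps above can be sketched as a small routing function plus an acceptance-rate calculation. The 0.90 confidence cutoff and the requirement of at least one evidence snippet are assumed values for illustration; each organization would set its own boundaries.

```python
from enum import Enum
from typing import Optional


class Route(Enum):
    ACTIONABLE_EDIT = "actionable_edit"   # strong evidence; coder confirms or rejects
    ADVISORY_PROMPT = "advisory_prompt"   # possible gap; coder investigates


def route_recommendation(confidence: float, evidence_count: int,
                         actionable_min: float = 0.90) -> Route:
    """Reserve actionable edits for high-confidence findings backed by evidence."""
    if confidence >= actionable_min and evidence_count >= 1:
        return Route.ACTIONABLE_EDIT
    return Route.ADVISORY_PROMPT


def acceptance_rate(coder_decisions: list[bool]) -> Optional[float]:
    """Share of ML suggestions that coders accepted; None if nothing was reviewed."""
    if not coder_decisions:
        return None
    return sum(coder_decisions) / len(coder_decisions)


print(route_recommendation(0.95, 2))
print(route_recommendation(0.95, 0))
print(acceptance_rate([True, True, False, True]))
```

Tracking the acceptance rate per code group over time gives one practical drift signal: a falling rate suggests the model and the coders are diverging.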

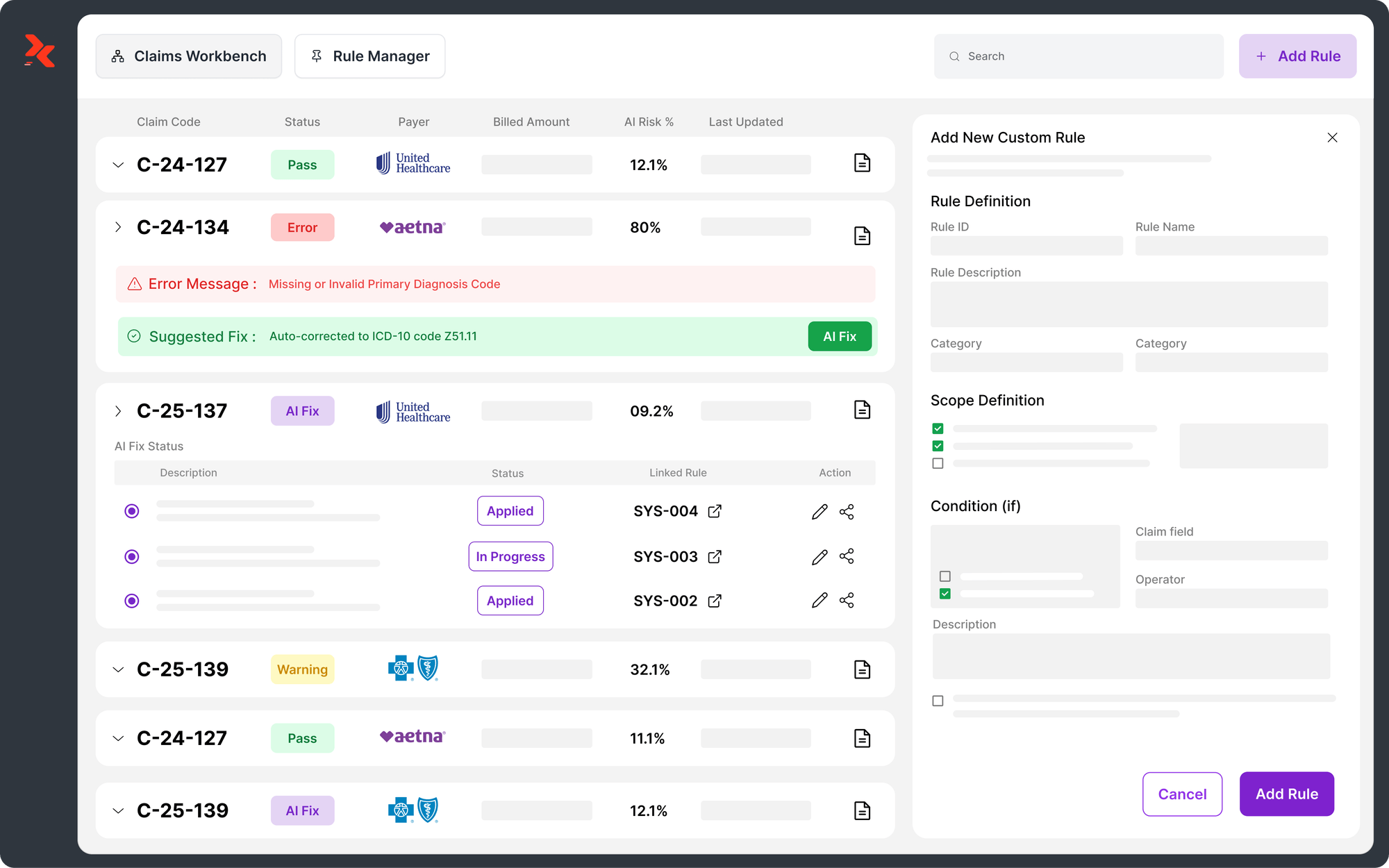

How RapidClaims Uses ML in Healthcare Coding and Compliance Audit Review

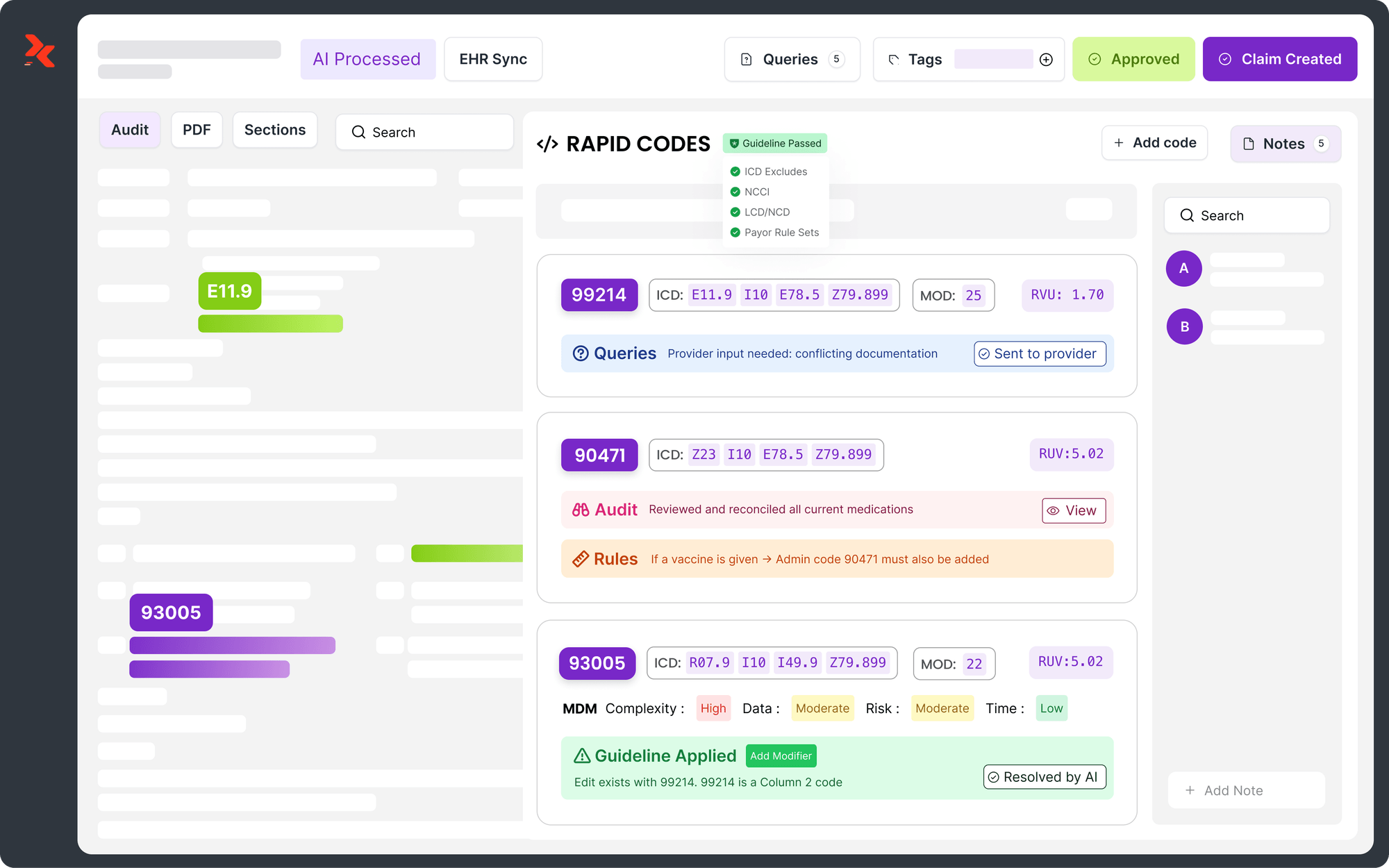

Platforms such as RapidClaims apply machine learning through focused, audit-ready components that strengthen healthcare coding and compliance audit workflows.

- NLP extraction: Captures diagnostic indicators, procedure attributes, and time-based qualifiers from clinical text.

- Audit-rule mapping: Aligns model outputs with payer validation requirements including HCC evidence checks, documentation sufficiency, and specialty-level criteria.

- Traceability: Links each suggested code to the exact documentation snippet and model confidence scores for audit defense.

- Human verification: Directs ML insights to coders or auditors who validate or override recommendations, creating structured feedback for continuous improvement.

- FHIR interoperability: Uses standardized exchange to pull complete encounter data so model decisions reflect the full clinical record.

These components form the technical foundation RapidClaims uses to support consistent coding accuracy and audit readiness.

If improving audit defensibility and reducing coding variation is a priority for 2025–2026, connect with a RapidClaims specialist to review your current workflows and identify high-impact opportunities.

Practical Use Cases of ML in Healthcare Coding and Compliance Audit Review

Machine learning enhances healthcare coding and compliance audit workflows by identifying documentation and coding issues that traditional review methods may overlook.

- Detecting gaps across multi-day encounters: ML tracks clinical details across consecutive visits and flags cases where documentation does not fully support ongoing diagnoses or service intensity.

- Interpreting nonstandard clinical phrasing: ML recognizes specialty-specific shorthand or implied diagnoses that may require documentation clarification before they create audit risk.

- Validating encounters that rely on modifier accuracy: ML identifies situations where pricing or coverage modifiers may be needed and checks whether supporting documentation is sufficient for payer review.

- Targeting missing elements that influence E/M code validity: ML highlights specific sections of the note where clinical reasoning, medication changes or risk factors are incomplete, guiding focused audit review.

- Reviewing multi-specialty documentation for coding alignment: ML consolidates input from different providers involved in an encounter and flags cases where combined documentation does not fully support the selected codes.

- Identifying encounters that require attestation confirmation: ML detects missing or inconsistent supervision or teaching physician attestations, helping compliance teams address issues before submission.
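As a small example of the E/M element check described above, the sketch below reports which expected note elements are empty or absent. The element names mirror the ones mentioned in the list; treating a note as a dictionary of pre-split sections is an assumption, since real notes need an extraction step first.

```python
# Elements taken from the E/M use case above; the keys themselves are hypothetical.
REQUIRED_ELEMENTS = ("clinical_reasoning", "medication_changes", "risk_factors")


def missing_elements(note_sections: dict[str, str]) -> list[str]:
    """Return required elements that are absent or contain only whitespace."""
    return [
        name for name in REQUIRED_ELEMENTS
        if not note_sections.get(name, "").strip()
    ]


note = {
    "clinical_reasoning": "Worsening dyspnea; suspect fluid overload.",
    "medication_changes": "   ",
}
print(missing_elements(note))
```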

Conclusion

Machine learning is becoming a core part of how organizations strengthen healthcare coding and compliance audit processes. It supports consistent interpretation of clinical documentation, improves the precision of internal audit activities and helps teams focus on the encounters most likely to affect financial and compliance outcomes. As audit programs expand and documentation requirements deepen, ML offers a reliable way to reduce variation, improve defensibility and maintain coding integrity at scale.

Organizations that adopt structured ML frameworks and maintain strong human oversight will be better positioned to respond to payer scrutiny and protect revenue performance.

To explore how ML can support your coding and audit operations, you can request a demo of RapidClaims.

FAQs

Q: What is a healthcare coding and compliance audit?

A: A healthcare coding and compliance audit is a structured review that compares coded claims to underlying clinical documentation, payer policy and regulatory standards. It checks whether diagnosis and procedure codes are supported, modifiers are used correctly, and claims align with medical necessity and audit readiness frameworks.

Q: How often should organizations perform coding and compliance audits?

A: Industry best practice suggests a baseline internal audit at least annually, with higher-risk service lines (e.g., specialties, HCC risk adjustment) audited quarterly and proactive reviews conducted concurrently or pre-bill when possible.

Q: What triggers an external audit by payers or regulators?

A: Triggers include sudden spikes in specific code usage or high-level E/M codes, inconsistent chronic condition documentation, frequent modifier use flagged by payers, and historical coding patterns that deviate from peer norms. All of these increase exposure in healthcare coding and compliance audits.

Q: What key metrics should RCM and coding leaders track for audit readiness?

A: Meaningful metrics include: percentage of audited charts with documentation deficiencies, rate of coding variances per provider or service line, number of ML-flagged high-risk encounters, and reduction in internal audit exceptions over time. These metrics directly support audit defensibility and compliance posture.
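Two of these metrics can be computed from a simple list of audited charts, as in the sketch below. The `AuditedChart` record and its fields are hypothetical; the sample data is made up to show the arithmetic only.

```python
from collections import defaultdict
from typing import NamedTuple


class AuditedChart(NamedTuple):
    provider: str
    documentation_deficiency: bool
    coding_variance: bool


def deficiency_pct(charts: list[AuditedChart]) -> float:
    """Percentage of audited charts with at least one documentation deficiency."""
    if not charts:
        return 0.0
    return 100.0 * sum(c.documentation_deficiency for c in charts) / len(charts)


def variance_rate_by_provider(charts: list[AuditedChart]) -> dict[str, float]:
    """Share of each provider's audited charts that had a coding variance."""
    totals: dict[str, int] = defaultdict(int)
    variances: dict[str, int] = defaultdict(int)
    for chart in charts:
        totals[chart.provider] += 1
        variances[chart.provider] += int(chart.coding_variance)
    return {p: variances[p] / totals[p] for p in sorted(totals)}


charts = [
    AuditedChart("P1", True, False),
    AuditedChart("P1", False, True),
    AuditedChart("P2", False, False),
    AuditedChart("P2", True, True),
]
print(deficiency_pct(charts), variance_rate_by_provider(charts))
```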

Q: Can machine learning help with healthcare coding and compliance audits?

A: Yes. ML can interpret large volumes of clinical data, flag documentation-coding mismatches, prioritize high-risk encounters for review, and log decision trails for audit defense. It must be integrated within governance frameworks to ensure compliant, traceable outcomes.

Rejones Patta

Rejones Patta is a knowledgeable medical coder with 4 years of experience in E/M Outpatient and ED Facility coding, committed to accurate charge capture, compliance adherence, and improved reimbursement efficiency at RapidClaims.
