Machine Learning Applications in Healthcare: Opportunities and Risks

Healthcare organizations are being asked to do more with less. Coding teams are navigating complex ICD-10, CPT, and HCC v28 requirements while managing growing documentation volumes, staffing shortages, and tighter reimbursement controls. When documentation or coding falls short, the financial impact is immediate. CMS reports a 7.66 percent improper payment rate in Medicare Fee-for-Service for 2024, totaling $31.7 billion, driven largely by documentation and coding gaps.

As payer scrutiny increases, even small inconsistencies in clinical detail can trigger rework, delayed payments, or audit risk. Manual review alone cannot keep pace with today’s encounter volumes and evolving coding rules. That is where machine learning applications in healthcare are gaining traction.

By analyzing clinical narratives alongside structured data, machine learning helps teams identify documentation gaps, flag coding risk, and focus attention where accuracy matters most. This article explores the most impactful machine learning applications in healthcare, the opportunities they create for coding and revenue cycle performance, and the risks organizations must manage to adopt them responsibly.

Key Takeaways

- Documentation volume, coding complexity and denial pressure continue to rise, increasing the workload and accuracy demands on coding and RCM teams.

- ML identifies documentation gaps and coding risks by analyzing both structured data and narrative clinical text in real time.

- The strongest impact comes when ML is applied at targeted workflow decision points rather than across all encounters.

- High-value ML use cases include risk adjustment support, coding specificity checks, clinical NLP for EHR narratives, denial prediction and focused documentation improvement.

- Effective ML programs require governance that addresses data quality, audit transparency, fairness, HIPAA compliance and ongoing performance tuning.

- Successful adoption depends on clear use cases, harmonized data across care settings, embedded insights in coder and QA workspaces and specialty-level performance monitoring.

- When integrated correctly, ML improves documentation clarity, code accuracy, review efficiency and overall revenue cycle reliability.

Key Challenges in Healthcare Coding and Documentation

Healthcare teams are navigating a level of operational complexity that continues to grow each year. Coders must interpret diverse clinical narratives, reconcile data across multiple EHR modules, and apply frequently updated ICD-10, CPT, and HCC v28 guidelines. At the same time, documentation often varies by provider, specialty, and encounter type, creating inconsistencies that make accurate coding and compliant claim submission difficult. These pressures stretch existing staff capacity and create downstream effects across billing, quality reporting, and financial performance.

Operational Pressures Impacting Coding and RCM Teams

- Fragmented clinical narratives: Encounter data often spans progress notes, discharge summaries, imaging reports, and external documents, forcing coders to reconcile multiple sources before finalizing codes.

- Variable provider documentation patterns: Differences in phrasing, abbreviations, and template use require coders to interpret inconsistent clinical language, increasing the risk of missed conditions or incorrect sequencing.

- High-volume chart backlogs: Surges in outpatient visits, telehealth encounters, and specialty consults create backlogs that delay billing cycles and compress coder review time.

- Frequent guideline updates: Annual ICD-10-CM changes, quarterly CPT updates, and CMS HCC v28 revisions require continuous training and manual workflow adaptation.

- Pre-submission risk factors: Many denials originate from documentation gaps such as missing chronic condition linkage, unspecified diagnoses, or incomplete procedure details; issues that are difficult to catch manually at scale.

- Limited visibility into real-time coding quality: Traditional QA workflows often occur post-submission, meaning errors are caught only after denials or audit findings, which increases rework and financial exposure.

What Machine Learning Means for Healthcare Operations

Machine learning in healthcare is most useful when it reflects how clinical and operational teams interpret information by identifying patterns across varied data sources. Instead of relying on fixed rules, ML models adapt to differences in provider wording, specialty workflows, and historical documentation trends. This helps them interpret clinical intent even when documentation is incomplete, inconsistent, or dispersed across multiple parts of the EHR.

Core ML and Clinical NLP Concepts Relevant to Healthcare Workflows

- Pattern recognition: ML models detect statistical relationships between clinical findings, orders, lab trends, and diagnoses.

- Classification models: These models estimate the most probable diagnosis category, procedure type, or coding outcome based on available indicators.

- Sequence models: These models interpret clinical narratives where the order of terms affects meaning such as acute versus chronic or confirmed versus ruled out.

- Clinical NLP: Domain-specific NLP interprets free-text notes and can understand abbreviations, shorthand, and specialty language that general NLP tools often misread.

- Contextual embeddings: These models learn relationships between terms across multiple encounters, giving awareness of chronic conditions, disease progression, and prior documentation patterns.

Benefits of ML in Coding and Revenue Cycle Performance

Machine learning adds value when it strengthens the specific workflow points that shape documentation quality, coding accuracy and claim performance. The benefits below reflect measurable operational impact rather than broad advantages.

- Early Detection of Documentation and Coding Risks: ML identifies missing qualifiers, inconsistencies and incomplete condition relationships before coding is finalized, reducing secondary reviews and preventable denials.

- More Consistent Code Assignment Across Specialties: By learning documentation patterns across departments, ML helps standardize interpretation of clinically relevant details, reducing variation in code selection.

- Improved Capture of Chronic and High-Impact Conditions: ML analyzes longitudinal clinical signals to flag conditions that need clarification for accurate risk adjustment, helping prevent overlooked diagnoses that affect reimbursement.

- Less Time Spent on Routine Verification Tasks: ML automates checks for common issues such as missing specificity or misaligned narrative and structured data, allowing coders and QA teams to focus on cases that need expert judgment.

- Smoother and More Predictable Claim Preparation: ML highlights encounter elements likely to attract payer scrutiny, enabling teams to resolve issues during coding instead of after submission.

Operational Visibility Into Workflow Gaps: ML detects patterns such as delayed chart closure or inconsistent charge capture timing, giving leaders actionable insight into where operational delays affect revenue cycle performance.

More Defensible and Evidence-Driven Decisions: ML shows which clinical elements support its flags, helping coders and QA teams make consistent, audit-ready decisions backed by clear documentation signals.

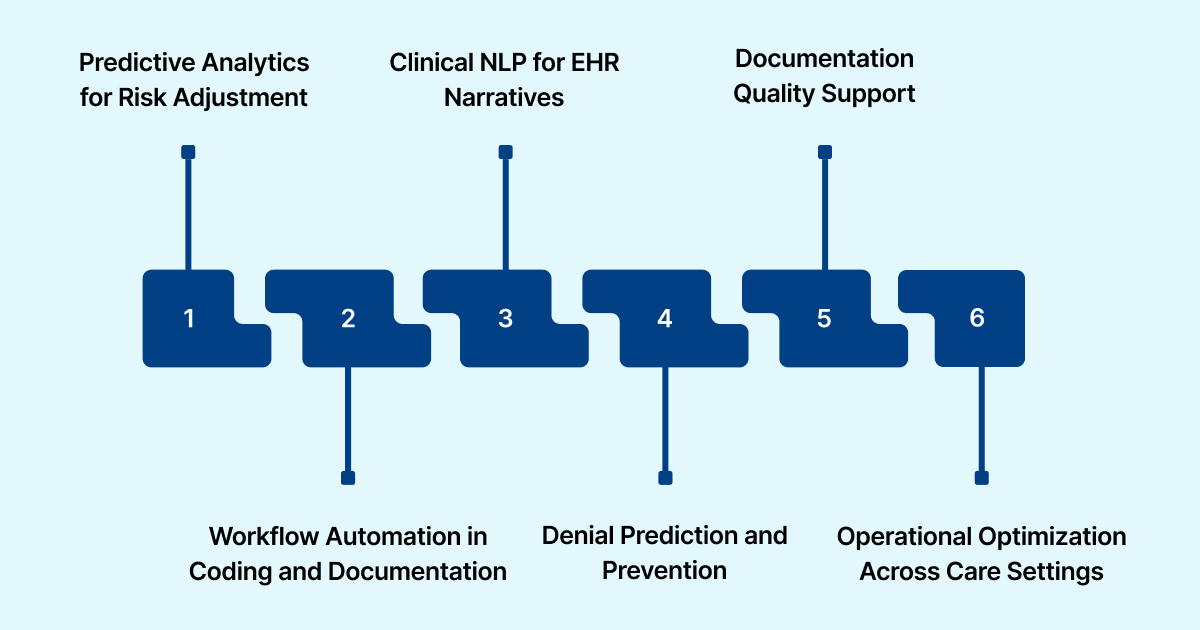

High-Value Machine Learning Applications in Healthcare

Healthcare operations generate clinical and administrative signals that coders, QA teams and revenue cycle staff often evaluate manually. Many of these signals reveal underlying patterns that influence coding accuracy, condition capture and claim outcomes, yet they are difficult to detect consistently across large encounter volumes. Machine learning identifies these patterns in real time and helps teams focus on areas where clinical detail, encounter complexity or historical payer behavior may influence the final claim. The following applications show where ML creates the strongest operational impact.

1. Predictive Analytics for Risk Adjustment

ML reviews longitudinal encounter data to identify signs of active conditions that may need clarification under HCC v28. It highlights clinical indicators that suggest ongoing treatment needs, helping teams focus on encounters where incomplete documentation could shift a patient's risk profile.

2. Workflow Automation in Coding and Documentation

ML detects clinical details that influence code specificity or sequencing and identifies inconsistencies between narrative notes and structured fields. This directs coders to areas that require expert interpretation and reduces time spent on low-value verification tasks.

3. Clinical NLP for EHR Narratives

ML-driven NLP interprets complex narrative text and extracts meaningful clinical insights that impact coding and quality reporting. It captures subtle changes in condition status or clinical reasoning that may not appear in structured data.

4. Denial Prediction and Prevention

ML analyzes encounter patterns linked to prior payer decisions and flags cases likely to face scrutiny. Teams receive early notice of documentation or coding elements that require reinforcement, which strengthens claims before submission.

5. Documentation Quality Support

ML pinpoints specific gaps in the record such as unclear disease status or incomplete condition relationships. It directs attention to the exact parts of the encounter that need clarification without expanding review workload.

6. Operational Optimization Across Care Settings

ML identifies workflow issues that affect coding accuracy and claim timing. Examples include delayed record finalization, variations in documentation habits across departments and inconsistent charge capture behavior. Leaders use these insights to refine processes that impact revenue performance.

Risks and Governance Requirements for ML Programs

Machine learning introduces new points of oversight for revenue cycle and compliance teams. These considerations focus on how ML interacts with real encounter data, how outputs influence coding and documentation decisions and how organizations maintain control as models evolve. The goal is to ensure that ML strengthens accuracy without creating gaps in accountability or audit readiness.

Bias and Fairness in Clinical Interpretation

ML may reflect historical documentation habits that vary between specialties or facilities. Governance teams must routinely compare model output across patient groups and service lines to confirm that condition detection and documentation flags remain consistent and free of unintended variation.

Model Transparency and Audit Alignment

RCM and compliance teams need clear visibility into how ML-generated suggestions were formed. Auditors often request evidence that clinical details support coded conditions or risk scores. An ML system must provide traceable reasoning that shows which clinical elements influenced each recommendation.

Data Governance and HIPAA Controls

ML relies on combined data from multiple clinical and financial systems. Differences in encounter attribution, timestamps or documentation structure can affect model training if not managed carefully. Organizations must maintain reliable data lineage and enforce access controls to support HIPAA compliance and accurate model behavior.

Workflow Integration and User Adoption

ML outputs lose effectiveness when they appear outside the tools coders and QA teams already use. Insights must be presented within the existing workflow to prevent parallel review processes. Alignment with established screens, queues and review steps is essential for adoption.

Performance Drift and Ongoing Validation

Clinical language, payer requirements and internal documentation habits change over time. ML models require periodic evaluation to confirm that outputs remain aligned with current standards. Governance teams should track performance across specialties and update configurations when patterns shift.

Explore how ML-driven coding support can reduce rework, strengthen documentation integrity, and improve financial performance. Request a RapidClaims demo tailored to your workflow.

How to Adopt Machine Learning Responsibly

Organizations get the most value from ML when they align it with the exact points in the workflow that influence coding accuracy, documentation clarity and claim outcomes. Responsible adoption requires focused goals, clean data inputs and continuous operational oversight.

Assess Workflow Readiness Before Deployment

Teams should review how encounters move from clinical documentation to coding and QA. This helps identify the steps where ML can meaningfully support decision-making, rather than inserting alerts where they add little value.

Define Specific, Measurable Use Cases

ML should address tightly scoped issues such as improving specificity for certain diagnoses, reducing specialty-driven variability or stabilizing review workloads in high-volume service lines. Clear boundaries prevent unnecessary alerts.

Align Data Across Care Settings

ML relies on consistent inputs. Organizations must reconcile differences in documentation templates, terminology and encounter structure across inpatient, outpatient and specialty systems so the model reads information accurately.

Embed ML Guidance in Existing Work Surfaces

Insights should appear directly in the coding panel, documentation view or QA workspace. Keeping ML within current tools ensures that recommendations are acted on during normal review steps.

Review Model Behavior With Coding and QA Leads

Specialty leads should routinely check whether ML flags are clinically meaningful and aligned with real coding logic. Regular validation helps refine ML to reflect staff expectations and actual encounter patterns.

Measure Results at the Specialty Level

Impact should be tracked using specialty-specific metrics such as coding precision for complex diagnoses, reviewer time per encounter or clarification requests. This reveals where ML is improving accuracy and where tuning is needed.

How ML Enhances Coding and Revenue Cycle Workflows

Machine learning improves revenue cycle performance by guiding coders and QA teams at the points in the workflow where decisions directly affect coding accuracy and claim quality. It evaluates how clinical information is introduced, updated and finalized throughout the encounter, allowing staff to address issues before they shape the final claim.

Prioritizing Encounters That Need Closer Review

ML identifies encounters with subtle inconsistencies such as incomplete condition progression, missing qualifiers or mismatched clinical impressions. This helps teams focus time on cases where accurate interpretation has the greatest impact.

Improving Consistency Across Specialties

ML highlights clinical indicators that should be evaluated uniformly across departments, reducing variation in code assignment caused by specialty-specific documentation habits.

Aligning Codes With the Full Clinical Picture

ML detects when late-added results or narrative details imply greater specificity than the assigned codes reflect. This helps coders adjust selections to match the complete encounter.

Supporting QA With Targeted Case Selection

ML identifies encounters with characteristics linked to coding errors or payer questions, enabling QA teams to concentrate on cases with the highest risk rather than relying on broad sampling.

Strengthening Throughput Without Rushing Reviews

ML reduces time spent on low-value checks and directs coders to the portions of the record that require expert interpretation. This improves throughput while maintaining documentation integrity and compliance.

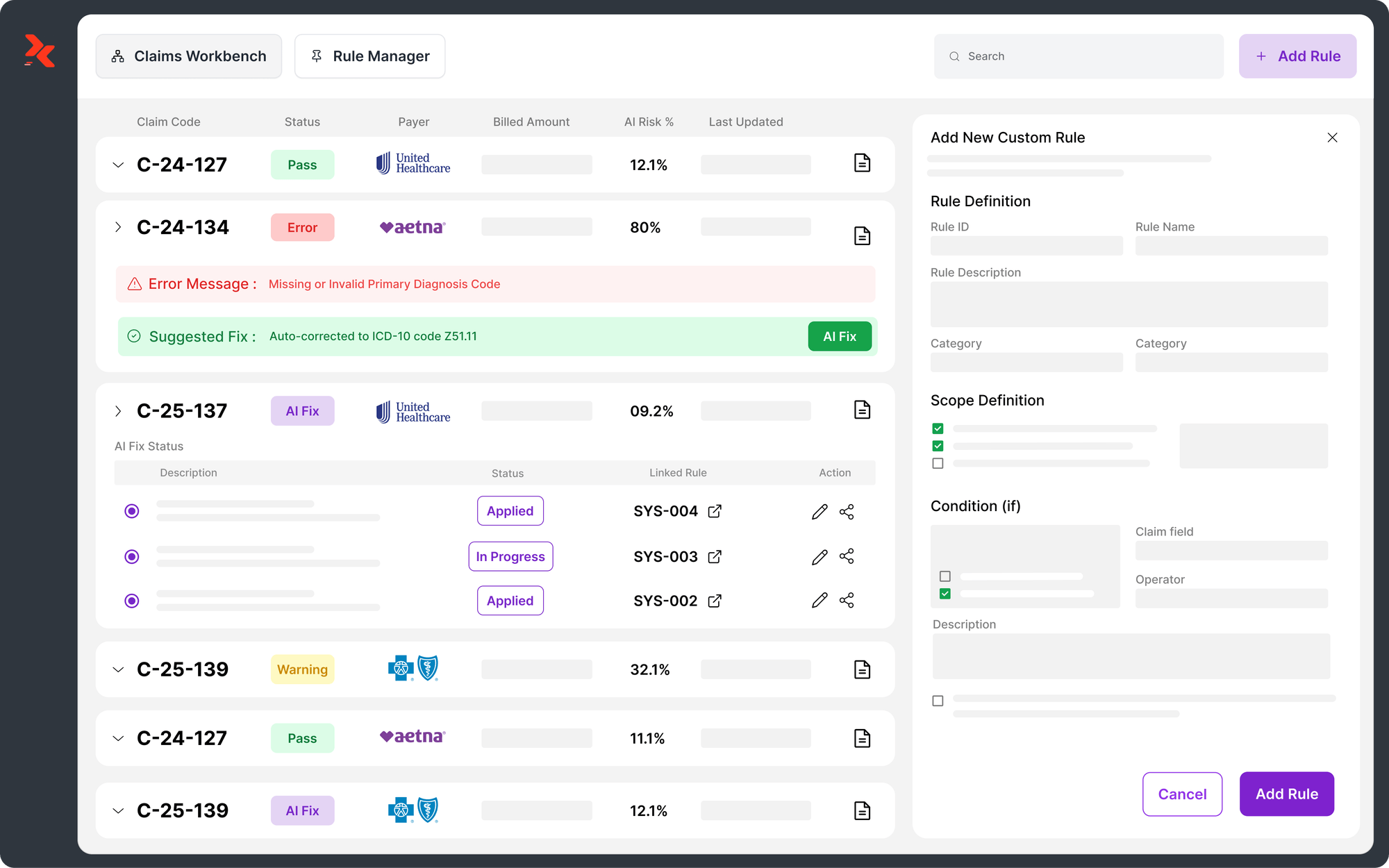

How RapidClaims Applies ML to Real Coding Challenges

RapidClaims applies machine learning at the points in the workflow where coding accuracy, documentation clarity and risk adjustment depend on precise interpretation of clinical detail. The platform evaluates encounter information as it develops and provides guidance that supports the decisions that shape final claim quality.

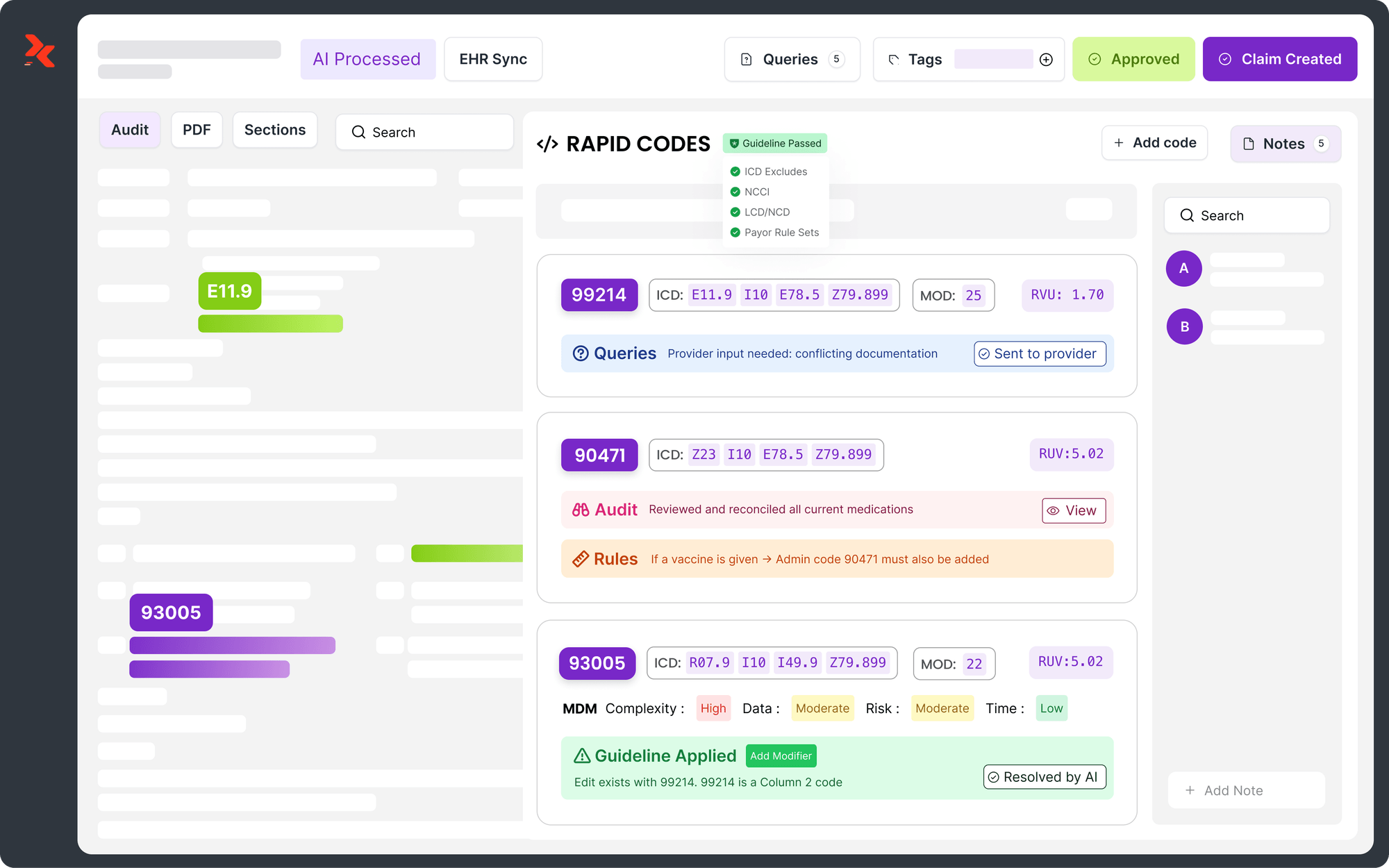

RapidCode: ML-Supported Code Identification

RapidCode analyzes clinical elements across the encounter and highlights details that influence code specificity or sequencing. It draws attention to patterns that reflect disease progression, procedural context or diagnostic clarity so coders can assign accurate codes based on the full clinical record.

RapidAssist: Documentation Clarity and Support

RapidAssist reviews how information is distributed across notes, assessments and ordered services. It identifies narrative gaps, conflicting statements or missing supporting detail and directs teams to the exact areas where clarification will strengthen the record.

RapidRecovery: ML Guided Denial Recovery Intelligence

RapidRecovery applies machine learning to analyze historical denial patterns, payer behavior, and encounter characteristics to identify recoverable claims. It helps teams prioritize high value appeals, align supporting documentation with payer requirements, and feed denial insights back into upstream coding and documentation improvement efforts.

Pre-Submission Accuracy Insights

RapidClaims identifies encounter characteristics that often lead to payer questions and highlights areas that may require additional detail or clarification. This helps teams refine documentation and coding while the record is still in progress.

Integrated Workflow Experience

RapidClaims presents its insights inside the same workspace coders and QA teams already use. This ensures that ML guidance fits directly into existing review processes and supports actions at the moment they matter most.

Need a deeper assessment of how ML can enhance your coding and audit readiness? Connect with our team for a strategic review of your current processes and improvement opportunities.

Conclusion

Machine learning is most effective when it supports the specific points in the workflow where documentation quality and coding accuracy influence financial outcomes. By guiding teams toward the encounters and clinical details that matter, ML strengthens decision-making without adding new administrative cycles.

RapidClaims brings this approach directly into coding and QA work. The platform analyzes encounter data in real time, surfaces clinically meaningful signals and helps staff resolve issues while the record is still active. This leads to clearer documentation, more accurate code selection and stronger performance across specialties.

To see how RapidClaims can support your organization’s RCM and coding operations, request a tailored demo.

FAQs

Q: What data sources do healthcare ML models use to improve coding accuracy?

A. Models draw on structured fields such as diagnosis and procedure codes, lab results, medication profiles and encounter metadata, combined with unstructured clinical text like provider notes and consult reports. Integrating these sources allows ML to surface missing condition qualifiers or inconsistent documentation that often affects claim outcomes.

Q: How can ML help reduce claim denials before submission?

A. ML identifies encounter-level patterns that have historically led to payer review, such as missing clinical justification or mismatches between narrative documentation and coded diagnoses. These early signals help coding and QA teams clarify documentation or adjust coding decisions before submission.

Q: What kind of governance is required when deploying ML in the healthcare revenue cycle?

A. Governance should cover data quality and lineage across systems, clear audit trails that link ML suggestions to clinical evidence and periodic specialty-level performance reviews to detect model drift, bias or misalignment with current documentation expectations.

Q: Can ML handle documentation from multiple specialties with different workflows?

A. Yes. When trained on multi-specialty encounter data, ML models can interpret variations in documentation style and highlight relevant clinical indicators consistently across departments. This reduces variability in coding interpretation and supports standardized accuracy across the organization.

Q: What steps should a health system take to integrate ML into existing coding workflows without disrupting operations?

A. The organization should map existing coding and documentation flows to identify where ML adds meaningful support, select focused use cases, harmonize data across inpatient, outpatient and specialty systems, embed ML insights directly into coder and QA workspaces and monitor impact by specialty and encounter type.

Rejones Patta

Rejones Patta is a knowledgeable medical coder with 4 years of experience in E/M Outpatient and ED Facility coding, committed to accurate charge capture, compliance adherence, and improved reimbursement efficiency at RapidClaims.

Latest Post

expert insights with our carefully curated weekly updates

Related Post

Top Products

%201.png)