The Ethical Considerations of AI in Medical Coding: Balancing Automation with Human Oversight

Artificial intelligence is quickly becoming foundational for effective Revenue Cycle Management (RCM). With automation and analytics estimated to be capable of eliminating $200 billion to $360 billion of spending in US healthcare, organizations increasingly rely on these tools to manage claim complexity, reduce errors, and sustain financial health.

However, this push for automated accuracy introduces new governance challenges. The core concern is simple: Does efficiency compromise integrity? For healthcare operations, IT leaders, and compliance officers, adopting AI requires establishing strict ethical parameters.

This blog outlines the key ethical considerations of AI in medical coding and provides actionable strategies for its responsible integration.

Key Takeaways

- Protect Patient Data from Secondary Use: Prohibit AI vendors from using your patient data for model training to avoid major HIPAA violations and unauthorized use.

- Mandate Performance Equity: Always test AI accuracy and denial rates across different demographic subgroups to proactively prevent algorithmic bias in billing outcomes.

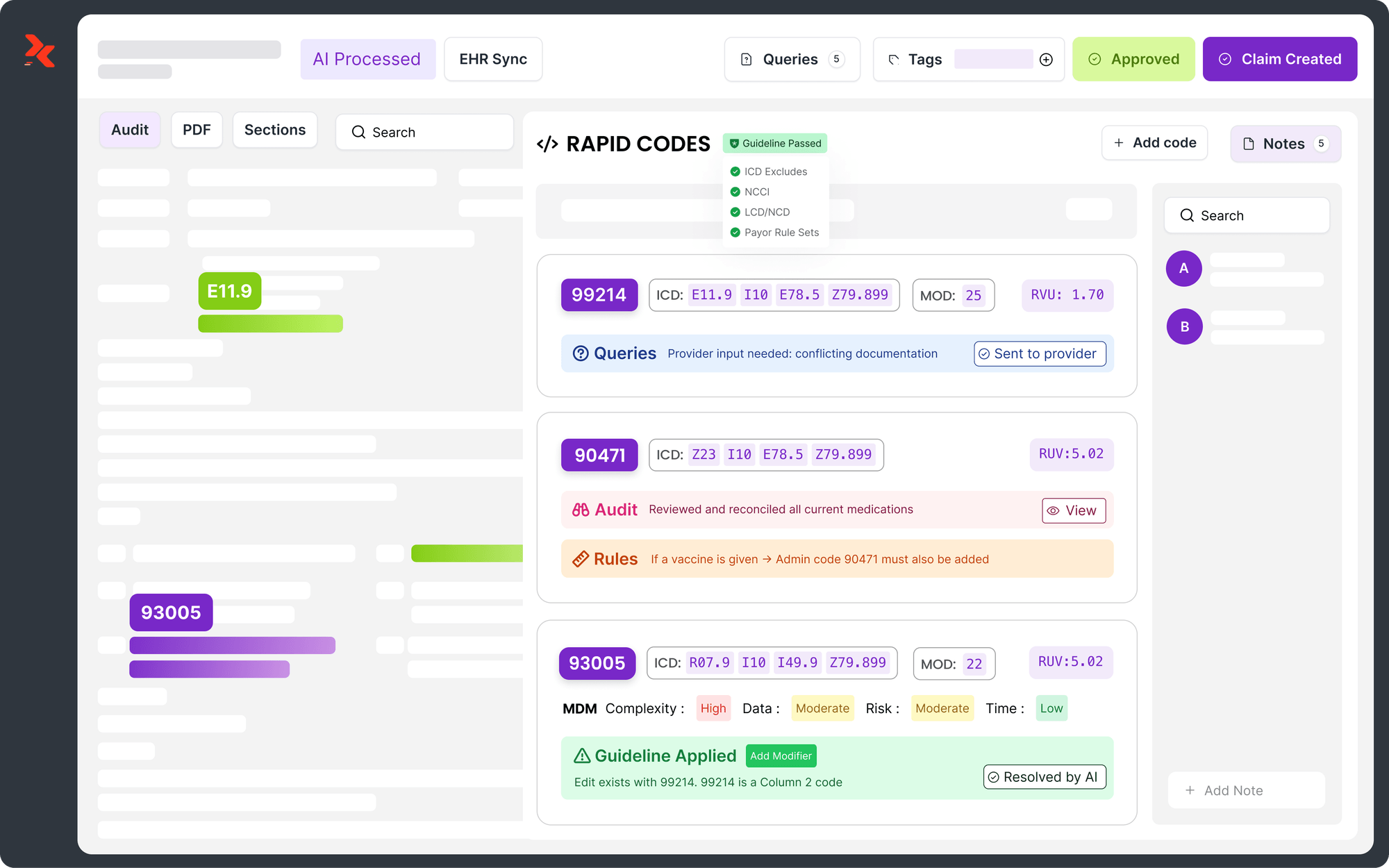

- Ensure Step-by-Step Explainability: Demand that the AI provide a clear, auditable justification for every code it suggests, eliminating the compliance risk of "black box" decisions.

- Keep Humans as the Final Authority: Establish policies where the human coder remains the legally responsible party for the final code and must log the reasons for overriding AI suggestions.

- Be Transparent with Patients: Clearly communicate the use of AI in your RCM process to uphold patient autonomy and maintain trust, going beyond the basic requirements for treatment, payment, and healthcare operations (TPO).

Table of Contents

- Upholding Data Security and Privacy

- Mitigating Algorithmic Bias and Ensuring Fairness

- Ensuring Transparency and Explainability

- Establishing Accountability and Human Oversight

- Addressing Informed Consent and Patient Rights

- Conclusion

- FAQs

Upholding Data Security and Privacy

Medical coding systems process vast quantities of sensitive patient information. For RCM professionals, safeguarding this data and adhering to the HIPAA Privacy and Security Rules is non-negotiable. Failure to maintain rigorous controls over this data leads to massive financial penalties, loss of patient trust, and potential legal liability for the organization.

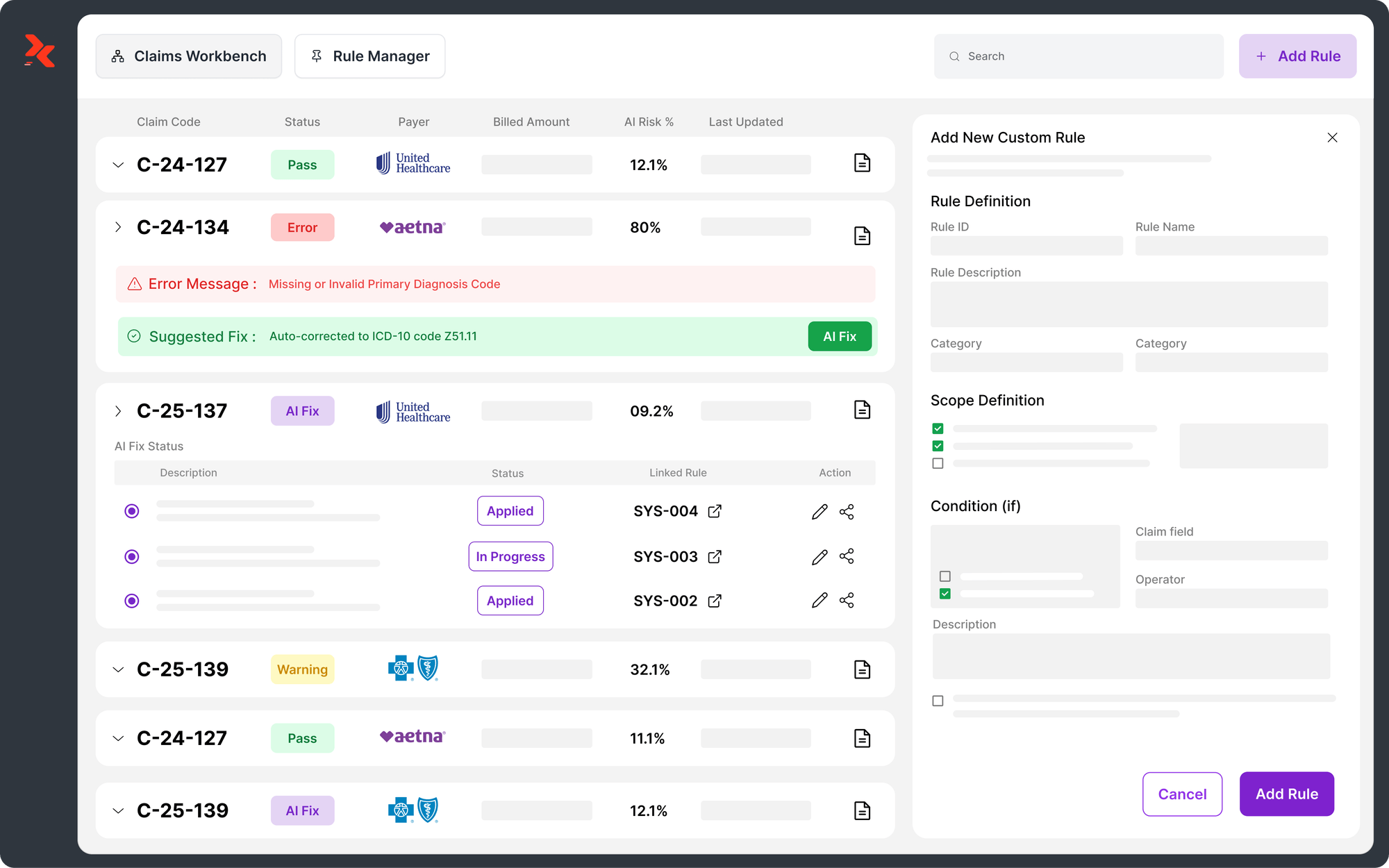

The Challenge

The sheer volume of data required to train and run accurate AI models introduces risks that traditional systems do not face:

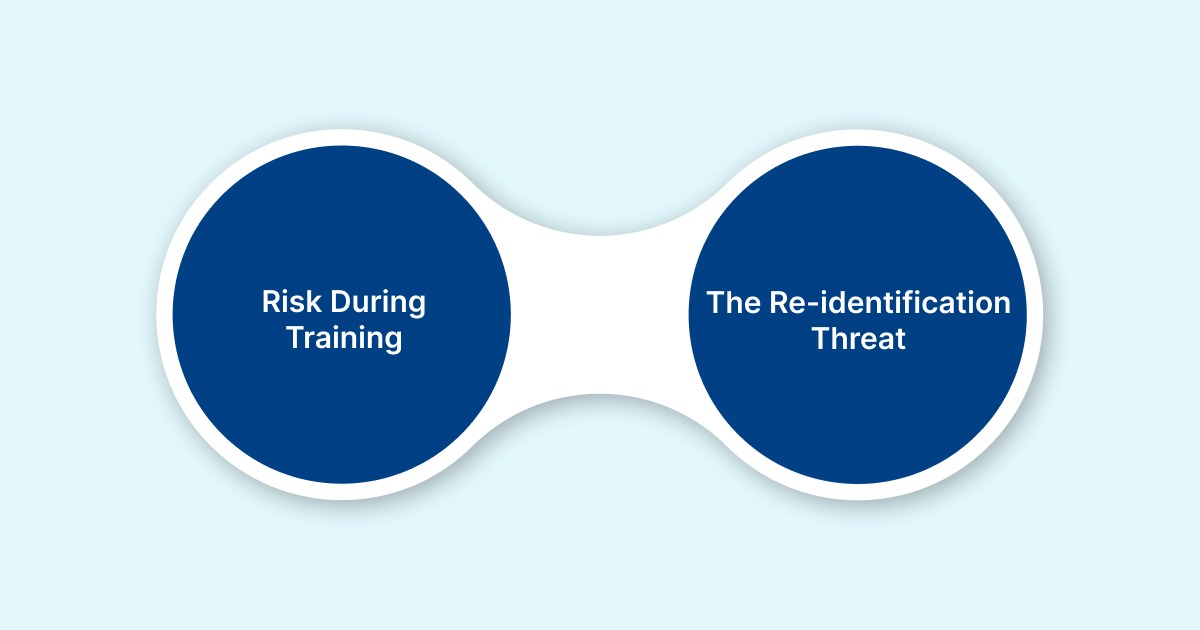

- Risk During Training (Secondary Use): AI algorithms are data-hungry. If your organization's sensitive data is inappropriately used by a third-party vendor to refine their commercial model, it constitutes unauthorized secondary use, leading to major HIPAA violations.

- The Re-identification Threat: AI’s power to recognize complex data patterns means even information that has been formally stripped of obvious identifiers can potentially be cross-referenced and linked back to individual patients. This re-identification risk challenges the integrity of de-identified data used in research or model development.

Solutions like RapidClaims are designed to deploy autonomous agents that learn clinical patterns while maintaining full security and auditability.

How to Govern AI Data Usage

To transition from policy to practical defense, compliance officers should focus on these implementable controls:

- Enforce Data Minimalism: Codify the "minimum necessary" standard in your AI policies. Configure the automated tools to access and pull only the clinical text and data fields required for coding and billing. Do not grant access to unnecessary portions of the patient record.

- Ensure Patient Transparency and Consent: Be transparent with patients about how AI tools are involved in processing their data for billing and operations. Ensure organizational policies align with obtaining clear patient authorization for any data use beyond routine treatment, payment, and healthcare operations (TPO).

- Implement Auditable Access: Secure the AI system's interface and data logs with Role-Based Access Control (RBAC) and Multi-Factor Authentication (MFA). This ensures that only authorized coders and managers can validate the AI's inputs and outputs, while all activity is logged to create a clear audit trail.
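To make these controls concrete, here is a minimal Python sketch of a "minimum necessary" filter combined with a role check and an access log. Everything named here is an assumption for illustration: the field allowlist, the role names, and the in-memory `audit_log` are hypothetical, and MFA is left to your identity provider rather than shown in code.

```python
from datetime import datetime, timezone

# Hypothetical allowlist: the only fields the coding tool may read.
CODING_FIELDS = {"encounter_id", "clinical_note", "procedures", "diagnoses", "payer"}
# Hypothetical roles permitted to review AI inputs and outputs.
AUTHORIZED_ROLES = {"coder", "coding_manager"}

audit_log = []  # stand-in for an append-only, access-controlled log store


def minimum_necessary_view(record, user, role):
    """Return only allowlisted fields and log every access attempt."""
    allowed = role in AUTHORIZED_ROLES
    audit_log.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "encounter_id": record.get("encounter_id"),
        "granted": allowed,
    })
    if not allowed:
        raise PermissionError(f"role '{role}' may not access coding data")
    return {k: v for k, v in record.items() if k in CODING_FIELDS}


record = {
    "encounter_id": "E-1001",
    "clinical_note": "Patient seen for follow-up of type 2 diabetes.",
    "diagnoses": ["E11.9"],
    "ssn": "000-00-0000",          # not needed for coding, so never exposed
    "family_history": "redacted",  # likewise outside the allowlist
}

view = minimum_necessary_view(record, user="jdoe", role="coder")
print(sorted(view))  # ['clinical_note', 'diagnoses', 'encounter_id']
```

Note that denied attempts are logged before the error is raised, so the audit trail captures both successful and refused access.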

By following these strategies, organizations can ensure AI systems are powerful operational tools that rigorously safeguard the patient data entrusted to them.

Mitigating Algorithmic Bias and Ensuring Fairness

AI algorithms in medical coding are designed to learn from historical patient data. However, if this training data reflects existing systemic disparities, the AI will learn and perpetuate that bias. This can lead to unfair financial and clinical outcomes, such as incorrect coding, improper claim denials, or inaccurate risk scoring for certain patient populations.

The Challenge

The central challenge is that bias isn't programmed into the AI; it is encoded in the data it learns from.

- Data Skew: If the training dataset contains a majority of records from one demographic group (e.g., higher-income patients or certain geographic regions), AI will perform less accurately when processing records for underrepresented groups.

- Proxy Variables: Even if protected attributes like race or gender are removed, other variables (like zip code or insurance type) can act as proxies, allowing the algorithm to continue making biased decisions without explicitly using the protected data.

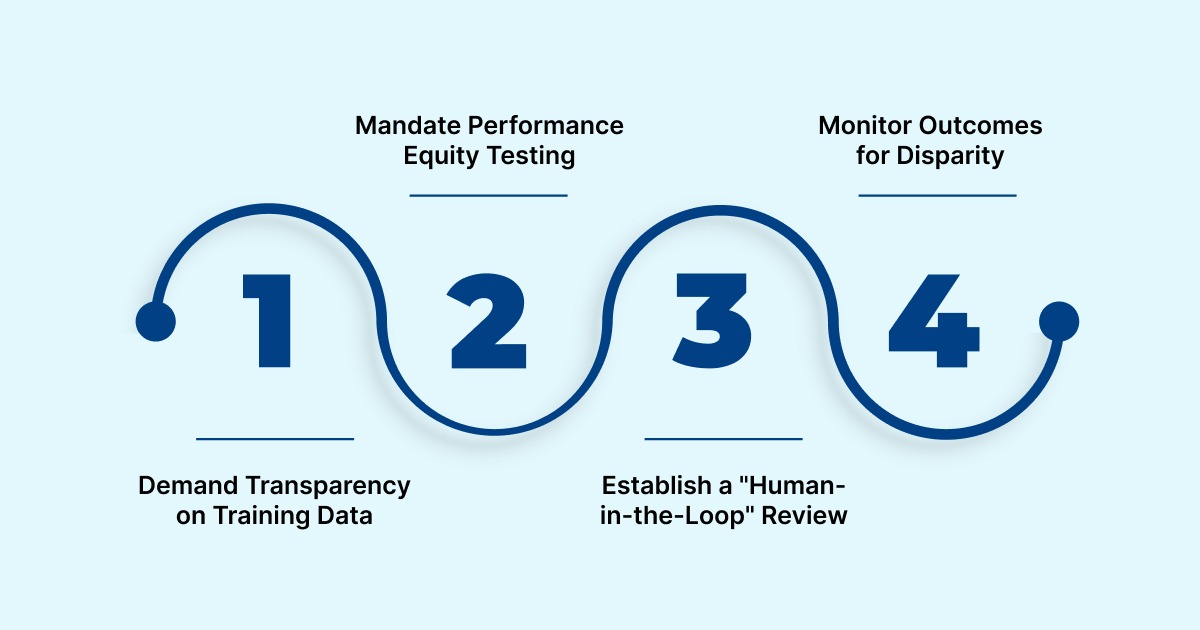

How to Prevent Algorithmic Bias

To ensure the ethical operation of AI systems, RCM leaders must enforce the following strategies:

- Demand Transparency on Training Data: Require AI vendors to disclose the composition and diversity of the data used to train their models. This allows your team to assess if the model is likely to perform reliably and fairly across your entire patient population.

- Mandate Performance Equity Testing: Do not settle for "overall accuracy." Require the AI system to be tested for accuracy and denial rates across different demographic subgroups (e.g., race, age, payer type) to identify and correct disparate impacts before deployment.

- Establish a "Human-in-the-Loop" Review: Design workflows where human coders and auditors are required to review and validate claims or codes flagged by the AI as complex or high-risk. This provides an essential human oversight layer to check for and override potentially biased automated decisions.

- Monitor Outcomes for Disparity: Continuously monitor financial and coding outcomes, specifically tracking claim denial rates or up/down-coding patterns by demographic. A sudden spike in denials for a specific group signals that algorithmic drift or bias needs immediate investigation and mitigation.
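As an illustration of performance equity testing, the following Python sketch computes accuracy and denial rate per subgroup and flags groups whose denial rate is noticeably higher than the best-performing group. The data is synthetic, and the field names, the grouping key (`payer_type`), and the 5-percentage-point `max_gap` threshold are assumptions, not regulatory standards.

```python
from collections import defaultdict


def subgroup_metrics(claims, group_key):
    """Compute accuracy and denial rate for each value of group_key."""
    totals = defaultdict(lambda: {"n": 0, "correct": 0, "denied": 0})
    for claim in claims:
        t = totals[claim[group_key]]
        t["n"] += 1
        t["correct"] += int(claim["ai_code"] == claim["audited_code"])
        t["denied"] += int(claim["denied"])
    return {
        group: {
            "n": t["n"],
            "accuracy": t["correct"] / t["n"],
            "denial_rate": t["denied"] / t["n"],
        }
        for group, t in totals.items()
    }


def flag_disparities(metrics, max_gap=0.05):
    """List groups whose denial rate exceeds the lowest group's by more than max_gap."""
    baseline = min(m["denial_rate"] for m in metrics.values())
    return sorted(g for g, m in metrics.items() if m["denial_rate"] - baseline > max_gap)


# Synthetic audit sample: (payer_type, ai_code, audited_code, denied)
rows = [
    ("commercial", "99213", "99213", False),
    ("commercial", "99214", "99214", False),
    ("commercial", "99213", "99213", False),
    ("commercial", "99215", "99215", False),
    ("medicaid", "99213", "99213", True),
    ("medicaid", "99214", "99213", True),
    ("medicaid", "99213", "99213", False),
    ("medicaid", "99214", "99214", False),
]
claims = [
    {"payer_type": p, "ai_code": a, "audited_code": g, "denied": d}
    for p, a, g, d in rows
]

metrics = subgroup_metrics(claims, "payer_type")
for group, m in sorted(metrics.items()):
    print(group, m)
print("Investigate:", flag_disparities(metrics))  # Investigate: ['medicaid']
```

In real use, subgroup samples should be large enough for the gap to be meaningful; a flagged group is a prompt for investigation, not proof of bias.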

Consider how RapidScrub™ provides proactive denial prevention with a 93% clean claim rate, giving teams confidence in the system's verifiable accuracy.

Ultimately, achieving fairness requires human governance to ensure AI-driven RCM supports equitable outcomes for every patient.

Ensuring Transparency and Explainability

When AI systems determine a patient's bill, a claim's status, or a provider's risk profile, stakeholders must understand how those outcomes were reached. This need for transparency is critical because it underpins both trust and accountability.

The Challenge

The core risk lies in the complexity of modern machine learning models, which often operate without clear, step-by-step reasoning:

- The Black Box Problem: Advanced AI models (like deep learning networks) can deliver highly accurate coding suggestions but cannot easily articulate why they chose one code over another. The output is clear, but the process is opaque.

- Audit Trail Deficiency: Without clear documentation of the factors (e.g., specific phrases in the clinical note) the AI used to arrive at a code, proving compliance during an audit or justifying an appeal to a payer becomes extremely difficult, increasing risk exposure.

How to Achieve AI Accountability

To move from opaque AI output to verifiable, accountable decisions, managers should implement these strategies:

- Mandate Human Validation Checkpoints: Do not allow AI to operate on full autopilot. Establish specific checkpoints where human coders must review and validate any AI-suggested code that changes the level of service, results in a high-value claim, or falls outside typical coding patterns.

- Require Granular Audit Logging: Ensure the AI system logs not just the final code, but the intermediate steps and the specific patient record data the algorithm accessed and weighted. This granular log is the verifiable audit trail needed to defend a claim in an appeal or regulatory review.

- Train Staff on AI Logic: Provide coders and auditors with specific training on the AI tool's interpretation methods. Staff should be equipped to explain to a payer or a patient why a specific code was generated based on the clinical documentation and the AI's transparent reasoning.
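A granular audit record might look something like the Python sketch below. It stores the suggested code together with the supporting phrases and their character offsets in the note, so an auditor can trace each code back to the documentation. The record layout, the `weight` score, and the model version string are hypothetical; a real system would take them from whatever explanation output the vendor's model actually provides.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class Evidence:
    phrase: str
    start: int     # character offset where the phrase begins in the note
    end: int       # character offset just past the phrase
    weight: float  # hypothetical relevance score reported by the model


@dataclass
class CodingAuditRecord:
    encounter_id: str
    suggested_code: str
    model_version: str
    fields_accessed: list
    evidence: list = field(default_factory=list)


def build_record(encounter_id, note, suggested_code, model_version, phrases):
    """Attach each supporting phrase with its offsets; reject unverifiable evidence."""
    record = CodingAuditRecord(encounter_id, suggested_code, model_version, ["clinical_note"])
    for phrase, weight in phrases:
        start = note.find(phrase)
        if start == -1:
            raise ValueError(f"evidence not found in note: {phrase!r}")
        record.evidence.append(Evidence(phrase, start, start + len(phrase), weight))
    return record


note = "Follow-up visit. Type 2 diabetes without complications, stable on metformin."
rec = build_record(
    "E-1001", note, "E11.9", "demo-model-0.1",
    [("Type 2 diabetes without complications", 0.82)],
)
print(json.dumps(asdict(rec), indent=2))
```

Rejecting evidence that cannot be located in the note is a deliberate choice: it keeps the log from recording justifications that do not exist in the clinical documentation.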

By demanding clear, verifiable, and documented reasoning for every coding output, organizations ensure that AI remains an accountable tool, eliminating the compliance risk associated with "black box" financial decisions.

Establishing Accountability and Human Oversight

In automated systems, the line of accountability blurs when errors occur. Since the healthcare organization holds the final legal and ethical responsibility for all billing decisions, relying on AI without a defined Human-in-the-Loop (HITL) framework represents an unacceptable regulatory and financial risk.

The Challenge

The greatest danger in this area is "automation bias": human staff over-rely on the AI's suggestions and stop applying their own professional judgment.

- Loss of Skill: Coders may become de-skilled and lose their ability to spot non-obvious errors if they stop critically reviewing AI outputs.

- The Delegation Trap: Management may incorrectly assume that because the AI is "always right," they can remove expert human review steps, leading to systemic errors that are only caught during a costly external audit.

How to Enforce Human Accountability

Effective governance requires clear policies that define the roles of both the human expert and the automated tool:

- Define Final Decision Authority: Clearly establish that the human coder or auditor remains the responsible party for the final submitted code, regardless of the AI's initial suggestion. AI is a tool, not a decision-maker.

- Implement Mandatory Review Points: Structure the RCM workflow so that human oversight is required for specific high-risk scenarios, such as:

  - Any claim that results in a significant up-coding or down-coding from the previous code set.

  - Unusual procedure-diagnosis pairings flagged as high-risk by the compliance department.

  - New coding combinations that the AI has never encountered before.

- Invest in Continuous Training: Regularly train coding staff not just on how to use AI, but how to critique its suggestions, understand its potential biases, and document instances where they override AI's recommended code.

- Maintain an Override Log: The system must record every instance where a human coder overrides an AI suggestion, along with the human's reason. This log provides crucial evidence that professional judgment and oversight are actively being enforced.
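The mandatory review points and the override log described above can be sketched in a few lines of Python. This is an illustration under stated assumptions: the high-risk pair list, the history of seen combinations, the numeric `level` fields, and the two-level threshold for a "significant" change are all hypothetical placeholders for rules your compliance team would define.

```python
from datetime import datetime, timezone

# Hypothetical reference data maintained by the compliance department.
HIGH_RISK_PAIRS = {("99215", "J06.9")}
SEEN_COMBINATIONS = {("99213", "E11.9"), ("99215", "J06.9")}
LEVEL_CHANGE_THRESHOLD = 2  # assumed meaning of a "significant" change

override_log = []  # stand-in for a persistent, tamper-evident log


def review_reasons(claim):
    """Return the reasons a claim must go to a human (empty list if none)."""
    pair = (claim["procedure_code"], claim["diagnosis_code"])
    reasons = []
    if abs(claim["level"] - claim["prior_level"]) >= LEVEL_CHANGE_THRESHOLD:
        reasons.append("significant level change")
    if pair in HIGH_RISK_PAIRS:
        reasons.append("high-risk procedure-diagnosis pairing")
    if pair not in SEEN_COMBINATIONS:
        reasons.append("new coding combination")
    return reasons


def finalize_code(claim_id, ai_code, final_code, coder_id, reason=""):
    """Record the coder's final decision; overrides need a documented reason."""
    if final_code != ai_code:
        if not reason.strip():
            raise ValueError("an override requires a documented reason")
        override_log.append({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "claim_id": claim_id,
            "ai_code": ai_code,
            "final_code": final_code,
            "coder_id": coder_id,
            "reason": reason,
        })
    return final_code


claim = {"procedure_code": "99215", "diagnosis_code": "J06.9", "level": 5, "prior_level": 3}
print(review_reasons(claim))
# ['significant level change', 'high-risk procedure-diagnosis pairing']

finalize_code("C-42", ai_code="99215", final_code="99213", coder_id="jdoe",
              reason="Documentation supports low-complexity decision making only")
print(len(override_log))  # 1
```

Refusing an override that has no reason attached is what turns the log into evidence of active professional judgment rather than a list of changed codes.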

By defining clear roles and enforcing mandatory, documented human review, organizations ensure that AI remains a supportive tool and that accountability always rests with the human experts.

Addressing Informed Consent and Patient Rights

The use of AI in coding and billing must respect patient autonomy. While HIPAA often covers this processing under routine treatment, payment, and healthcare operations (TPO), the ethical imperative demands greater clarity. Patients have a fundamental right to understand which systems process their sensitive health data and influence their financial obligations, and honoring that right is what maintains trust.

The Challenge

The opacity of AI can lead to a breakdown of patient trust and confusion regarding their data rights:

- Erosion of Trust: If a patient is unaware that multiple third-party AI models are ingesting their data, it can be viewed as a violation of the underlying trust agreement, potentially leading to complaints or data access requests.

- Consent Scope Creep: There is a risk that data initially consented for billing purposes may be unintentionally used for secondary purposes, like model training or internal risk scoring, without proper authorization or de-identification.

How to Preserve Patient Autonomy

RCM and compliance teams must integrate patient communication into the AI framework:

- Ensure Full Transparency: Clearly communicate to patients, through your Privacy Notices, intake forms, or digital portals, that AI systems are utilized to process their clinical documentation and determine billing codes.

- Define Data Use Limits: Explicitly state in vendor contracts and internal policies that patient data is used only for the agreed-upon billing and RCM functions, and that it will not be shared or used by the vendor for general model training without a new, explicit patient authorization.

- Simplify Communication: Avoid technical jargon. Explain the AI's role simply: "We use an automated system to read your records and check billing codes for accuracy, speeding up your claim process."

- Establish an Inquiry Channel: Create a dedicated, easy-to-use pathway for patients to ask specific questions about how AI processed their health information or to object to its usage formally.
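The data use limit described above can also be enforced in software, not only in contracts. The short Python sketch below shows one possible purpose-of-use check. The purpose labels and the idea of storing a patient's authorizations as a set are assumptions made for illustration.

```python
ROUTINE_PURPOSES = {"coding", "billing"}  # assumed to be the agreed-upon RCM functions


def use_is_permitted(purpose, patient_authorizations):
    """Allow routine RCM purposes; anything else needs an explicit authorization."""
    if purpose in ROUTINE_PURPOSES:
        return True
    return purpose in patient_authorizations


print(use_is_permitted("billing", set()))                      # True
print(use_is_permitted("model_training", set()))               # False
print(use_is_permitted("model_training", {"model_training"}))  # True
```

A check like this would sit in front of any data export to a vendor, so that a request tagged for model training is refused unless the patient's record carries a matching authorization.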

By adopting a proactive approach to patient communication and clearly defining the permissible scope of data use, organizations uphold the ethical duty to secure informed consent and maintain patient trust.

Conclusion

Integrating AI isn't just a technical update; it's about making a clear commitment to trust and responsibility. To succeed, organizations must intentionally set clear rules that put patient privacy, fairness in billing, and human oversight ahead of just speed. By making ethics a core part of automated workflows, RCM leaders build confidence and protect the financial integrity of their practices.

To achieve these high standards for ethical AI in medical coding, you need a system designed for both human control and strong compliance. RapidClaims offers a revenue cycle automation platform that works alongside your team and enforces complex payer rules instantly. We give you the transparency and control you need to achieve 100% audit compliance.

Contact RapidClaims today to see our ethical AI in action and start strengthening your compliance framework.

FAQs

1. What is the biggest operational benefit of using AI in RCM?

The biggest benefit is denial prevention and faster cash flow. AI can accurately predict which claims are likely to be denied based on payer rules, allowing human staff to fix errors before the claim is submitted.

2. How does AI use big data while still following HIPAA's "minimum necessary" rule?

This is a challenge. For model training, organizations can reduce exposure with techniques such as federated learning or synthetic data. For operations, the AI system should be configured to access only the minimum necessary text and data fields required for the specific coding task.

3. What is the difference between RPA and AI in medical coding?

RPA (Robotic Process Automation) handles simple, static rules (e.g., logging into a payer portal to check a claim status). AI (Artificial Intelligence) uses machine learning and natural language processing (NLP) to read and interpret complex, unstructured clinical notes to suggest codes.

4. Should we get our AI vendor's system checked for bias?

Yes, absolutely. You should require your vendor to demonstrate how their model was tested across diverse demographic data (age, race, socioeconomic status) to ensure it does not recommend unfair coding patterns for specific patient groups.

Rejones Patta

Rejones Patta is a knowledgeable medical coder with 4 years of experience in E/M Outpatient and ED Facility coding, committed to accurate charge capture, compliance adherence, and improved reimbursement efficiency at RapidClaims.
