Healthcare Generative AI for Coding and Audit Review Workflows

Healthcare organisations must deal with growing chart volumes, complex coding rules and rising documentation burdens. These pressures have accelerated interest in healthcare generative AI, which can interpret unstructured clinical narratives and turn them into usable detail for coding and review. According to an industry report, in FY 2024 the estimated improper payment rate for traditional Medicare was 7.66 percent, representing approximately 31.70 billion dollars in improper payments. For enterprise teams aiming to reduce rework and strengthen documentation alignment, these new capabilities offer timely operational value.

Key Takeaways

- Healthcare generative AI improves how coding and review teams interpret clinical narratives, helping them identify critical detail that influences specificity, severity and audit defensibility.

- Documentation gaps are detected earlier, allowing coders and auditors to resolve missing or unclear information before a claim moves forward.

- Cross-encounter reasoning supports accurate chronic condition capture, which is essential for risk-based programs and consistent longitudinal documentation.

- Compliance teams gain clearer validation pathways, since generative AI provides traceable reasoning that aligns with CMS and payer expectations.

- RapidClaims operationalises these capabilities inside existing EHR and RCM workflows, giving organisations improved accuracy and reduced rework without changing reviewer processes.

Table of Contents:

- Market Shifts Driving Adoption of Healthcare Generative AI

- Core Capabilities of Healthcare Generative AI in Clinical Documentation Review

- Healthcare Generative AI Use Cases in Coding and RCM

- How to Implement Healthcare Generative AI in Review Teams

- Compliance and Governance Requirements for Healthcare Generative AI

- Measuring Operational Impact of Healthcare Generative AI

- How RapidClaims Applies Healthcare Generative AI

- What Is Next for Healthcare Generative AI in RCM

- Conclusion

- FAQs

Market Shifts Driving Adoption of Healthcare Generative AI

Healthcare organisations are entering a stage where documentation quality, coding precision and audit readiness directly influence financial stability. Instead of simply accelerating workflows, leaders are now prioritizing technologies that improve clinical interpretation and reduce downstream revenue risk.

Shifts shaping enterprise adoption

- Growing reliance on clinical narrative intelligence, not just extraction or prediction

- Increased payer-driven documentation specificity, especially for chronic conditions in HCC v28

- Rising demand for explainable AI outputs that auditors and compliance teams can validate

- Movement toward EHR-integrated coding augmentation, where AI supports review within native workflows

- Expansion of value-based care programs, which require accurate longitudinal documentation across encounters

Where organisations are focusing generative AI efforts

- Consolidating lengthy encounter notes into precise clinical summaries for coding review

- Identifying missed indicators that influence severity and reimbursement

- Strengthening documentation integrity programs by surfacing unclear or incomplete provider notes

- Improving consistency across multi-site physician groups that document differently

- Preparing teams for more frequent and more structured payer audits

Why health systems see strategic value

Generative AI is being adopted less as an automation tool and more as a clinical understanding layer that supports every downstream revenue-cycle step. Leaders are prioritizing platforms that translate narrative detail into coding-ready information, support compliance validation and plug into existing EHR and RCM systems without adding friction.

Core Capabilities of Healthcare Generative AI in Clinical Documentation Review

Traditional automation in healthcare focuses on extraction or rule matching. Healthcare generative AI expands this by interpreting meaning in clinical text, improving how reviewers interact with narrative-heavy documentation.

How generative AI processes clinical detail

Generative models evaluate the full clinical narrative, not just keywords or structured fields. This allows the AI to recognize:

- nuanced symptoms or progression patterns that influence code specificity

- physician reasoning embedded in long narrative sections

- clinical relationships across multiple encounters that affect chronic condition capture

This depth of interpretation provides coding teams with richer context before assigning final codes.

Why this matters for coding accuracy

Generative AI can articulate why a documented condition is clinically relevant. This supports coders and auditors who must justify specificity and severity during reviews. It also helps identify incomplete or ambiguous documentation that would otherwise lead to downcoding or denial exposure.

Capabilities traditional NLP cannot deliver

Generative systems can produce structured, reviewer-ready content such as:

- concise summaries aligned with coding guidelines

- clarification questions positioned to reduce provider back-and-forth

- narrative rationales that show how documentation supports a particular coding path

These outputs supplement human judgment and strengthen audit defensibility.

Supporting compliance requirements

Generative AI can explain its suggested interpretations in natural language. This allows compliance teams to validate:

- how the model linked evidence to a condition

- whether documentation meets payer expectations

- where additional detail is required for audit readiness

The focus shifts from automated extraction to transparent, reviewable reasoning.

See how RapidClaims uses generative AI to strengthen documentation and coding accuracy. Request a personalized demo today.

Healthcare Generative AI Use Cases in Coding and RCM

Generative AI is most effective when directed at specific gaps inside coding and documentation workflows. In many of these areas, healthcare generative AI provides the level of interpretation needed to support reviewer decisions.

Identifying silent indicators that shape code specificity

Many encounter notes include clinical context that affects specificity but is not explicitly documented as a condition. Generative AI can detect these implicit indicators, such as treatment patterns, diagnostic rationale or progression descriptors, and highlight them for coder review. This supports accurate code refinement without altering clinical documentation.

Detecting inconsistencies across multi-section clinical notes

Providers often describe the same condition differently across the HPI, assessment, procedure notes and discharge plan. Generative AI can compare these sections and flag contradictions or missing clarifications that influence coding decisions or audit vulnerability.
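As a minimal sketch of the comparison step described above, assume a model or reviewer has already pulled one label per note section for a single condition. The function name `conflicting_sections` and the section keys are hypothetical, chosen only for illustration, and the check is a plain string comparison rather than any clinical reasoning.

```python
def conflicting_sections(section_labels):
    """Return the sections that disagree about one condition.

    section_labels maps a section name (e.g. "HPI") to the label that
    section uses for the condition, or None if the section is silent.
    Returns an empty dict when every section that speaks agrees.
    """
    stated = {
        section: label.strip().lower()
        for section, label in section_labels.items()
        if label and label.strip()
    }
    # More than one distinct label means the note contradicts itself.
    return stated if len(set(stated.values())) > 1 else {}


# Hypothetical example: the HPI and the assessment disagree on acuity.
flags = conflicting_sections(
    {"HPI": "Acute", "Assessment": "chronic", "Discharge plan": None}
)
print(flags)  # {'HPI': 'acute', 'Assessment': 'chronic'}
```

Anything this check returns is a prompt for coder review, not a coding decision.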

Strengthening linkage between conditions and underlying clinical evidence

Revenue cycle teams often struggle to validate whether documentation supports severity or complication codes. Generative AI can outline the clinical elements that justify a condition, helping coders verify evidence alignment before submission. This improves defensibility during payer audits.

Pre-submission checks for documentation integrity

Before a claim moves downstream, generative AI can evaluate whether the note contains the elements required for code validation, such as:

- specificity qualifiers

- relevant history or progression

- treatment-linked indicators

- clarity around acute versus chronic presentations

This helps prevent denials tied to insufficient narrative detail.
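The checklist above can be sketched as a simple completeness gate. This is an illustration under stated assumptions: the element names in `REQUIRED_ELEMENTS` and the `NoteFindings` type are hypothetical, and the step that decides which elements are present in a note (the generative model plus reviewer confirmation) is outside the sketch.

```python
from dataclasses import dataclass, field

# Hypothetical element names mirroring the checklist above. A real
# deployment would define these with coding and compliance leads.
REQUIRED_ELEMENTS = (
    "specificity_qualifier",
    "history_or_progression",
    "treatment_linked_indicator",
    "acuity_stated",  # acute versus chronic presentation
)


@dataclass
class NoteFindings:
    """Elements reported as present in one encounter note."""
    encounter_id: str
    present: set = field(default_factory=set)


def missing_elements(findings):
    """Return required elements that were not found, in checklist order."""
    return [e for e in REQUIRED_ELEMENTS if e not in findings.present]


def ready_for_submission(findings):
    """True only when every required element was found."""
    return not missing_elements(findings)


findings = NoteFindings("enc-001", {"specificity_qualifier", "acuity_stated"})
print(missing_elements(findings))
# ['history_or_progression', 'treatment_linked_indicator']
```

Returning the missing items in a fixed order keeps the output stable for reviewers who see the same checklist on every chart.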

Highlighting missing components for risk-based programs

Risk-bearing organisations rely on consistent capture of chronic conditions across the year. Generative AI can detect when a condition was clinically managed during the encounter, for example through a medication adjustment or monitoring order, but was not documented as an assessed diagnosis. The AI can then notify coding teams that the case requires review for potential omission.

Cross-encounter reasoning for longitudinal accuracy

When reviewing multiple visits, coders may miss changes in disease progression that affect severity classification. Generative AI can compare encounters across a time span and surface meaningful shifts that influence coding, helping teams maintain continuity for chronic disease categories.
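One way to picture the cross-encounter comparison is the sketch below. It assumes each visit has already been reduced to a condition name and a severity label; the `ConditionMention` type and the label values are hypothetical, and the comparison is a plain string inequality, not a clinical judgment about progression.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ConditionMention:
    encounter_date: date
    condition: str
    severity: str  # hypothetical free-text label, e.g. "stage 3a"


def severity_shifts(mentions):
    """Return (condition, earlier, later) for each change in severity
    label between consecutive encounters for the same condition."""
    last_seen = {}
    shifts = []
    for m in sorted(mentions, key=lambda m: m.encounter_date):
        prev = last_seen.get(m.condition)
        if prev is not None and prev.severity != m.severity:
            shifts.append((m.condition, prev, m))
        last_seen[m.condition] = m
    return shifts


history = [
    ConditionMention(date(2024, 9, 3), "CKD", "stage 3b"),
    ConditionMention(date(2024, 2, 10), "CKD", "stage 3a"),
    ConditionMention(date(2024, 5, 21), "CKD", "stage 3a"),
]
for condition, earlier, later in severity_shifts(history):
    print(condition, earlier.severity, "->", later.severity)
```

Sorting by date first means the input can arrive in any order, which is common when encounters are pulled from several source systems.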

Validation support for internal audits and second-level reviews

Audit teams can use generative summaries to quickly review how documentation aligns with coding decisions. The model can present side-by-side rationales that show:

- where documentation supports the assigned code

- where supporting evidence is thin

- where clarification is required for audit readiness

This shortens review cycles while preserving rigorous compliance standards.

Payer-specific interpretation insights

Because documentation expectations vary across payers, internal teams spend significant time adapting coding or audit decisions. Generative AI can highlight passages that may be interpreted differently under common payer rule sets. This helps revenue cycle leaders prepare coders for potential disputes before submission.

How to Implement Healthcare Generative AI in Review Teams

Successful adoption of generative AI in coding and revenue cycle operations depends on how well it is introduced into existing clinical and administrative environments. The goal is to strengthen interpretation quality, reduce reviewer workload, and support audit-ready documentation without disrupting established workflows.

Map high-influence workflows before deployment

Organisations should identify coding and documentation paths that create measurable downstream effects. These often include complex service lines, high-denial categories, or encounters that require extensive narrative review. Prioritizing these areas helps teams observe impact quickly and refine model behavior through real-world feedback.

Align model behavior with organisation-specific coding standards

Every organisation maintains variations in coding preferences, escalation rules and documentation expectations. Generative AI can be configured to reflect these patterns so its outputs align with internal practice. This reduces noise for coders and ensures suggestions follow the organisation’s review sequence.

Integrate within established EHR and RCM touchpoints

Introducing generative AI at the correct interaction points allows teams to use insights without adjusting their workflows. Placement near clinical note review, audit checks or second-level review ensures that coders and auditors receive context at the exact moment they evaluate documentation.

Build reviewer controls that guide model acceptance

Coders and auditors should be able to approve, edit or decline generative outputs in ways that capture reasoning for future improvement. This helps the organisation build a consistent feedback loop that strengthens the model’s clinical interpretation and keeps compliance validation transparent.
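A reviewer control of this kind could be modelled as a small record that refuses to be saved without a reason. The sketch below is illustrative only: `Decision`, `ReviewerAction` and the validation rules are assumptions about how an organisation might enforce captured reasoning, not a description of any particular product.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Decision(Enum):
    APPROVED = "approved"
    EDITED = "edited"
    DECLINED = "declined"


@dataclass(frozen=True)
class ReviewerAction:
    """One reviewer response to one AI suggestion (hypothetical schema)."""
    suggestion_id: str
    reviewer_id: str
    decision: Decision
    reason: str = ""
    final_text: Optional[str] = None  # required when the suggestion is edited

    def __post_init__(self):
        # Edits and declines must carry a reason so the feedback is usable.
        if self.decision is not Decision.APPROVED and not self.reason.strip():
            raise ValueError("a reason is required when editing or declining")
        if self.decision is Decision.EDITED and not self.final_text:
            raise ValueError("an edited suggestion must include the final text")


action = ReviewerAction(
    suggestion_id="s-42",
    reviewer_id="coder-7",
    decision=Decision.DECLINED,
    reason="Severity is not supported by the assessment section.",
)
print(action.decision.value, "-", action.reason)
```

Making the record immutable (`frozen=True`) keeps a reviewer's original decision intact once it is stored.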

Coordinate governance with audit and compliance teams

Compliance leaders should be involved early to define which outputs can be used directly, which require manual validation and which should be excluded from certain encounter types. This ensures the organisation maintains audit-ready oversight across all coding pathways.

Establish measurement plans before activation

Teams should define which metrics will demonstrate value for each use case. These can include coding clarification rates, reviewer time per encounter, accuracy adjustments during audits or changes in documentation completeness. Tracking these measures from the first week of deployment supports objective evaluation and informed scaling.

Want to improve audit readiness and reduce rework? Connect with RapidClaims to explore real-time AI insights inside your existing workflow.

Compliance and Governance Requirements for Healthcare Generative AI

Generative AI affects how documentation is interpreted, so governance must confirm that outputs remain compliant, reviewable and aligned with payer expectations.

- Maintain clear separation between AI insight and coder judgment: AI suggestions must stay advisory. Coders should confirm every interpretation and document the rationale for final code selection to preserve audit defensibility.

- Validate that model outputs reflect actual clinical evidence: Compliance teams should regularly review sample encounters to ensure the AI does not introduce unsupported conditions or overstated severity.

- Control which encounter types receive AI assistance: Some services, such as high-acuity cases or notes with limited clinical detail, may require full manual review. Governance teams should define these rules early.

- Record how AI influenced each review step: Audit trails should capture what the model highlighted and how reviewers acted on it. This supports internal audits and payer inquiries.

- Update oversight rules as payer requirements shift: Payers frequently adjust documentation thresholds. Governance teams must sync rule updates with the AI review process so coding decisions remain aligned with current standards.
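The audit-trail requirement above could be met with an append-only log that stores one entry per review step. The following is a rough sketch, assuming JSON lines as the storage format. The field names are hypothetical, and the example stores references to note sections rather than note text, on the assumption that the log should not duplicate protected health information.

```python
import json
from datetime import datetime, timezone


def audit_entry(encounter_id, highlighted, reviewer_id, action, timestamp=None):
    """Serialise one review step as a JSON line (hypothetical field names).

    highlighted: list of references to what the model flagged,
                 e.g. section names, not the note text itself.
    action:      how the reviewer responded, e.g. "accepted".
    """
    ts = timestamp or datetime.now(timezone.utc)
    return json.dumps(
        {
            "encounter_id": encounter_id,
            "model_highlighted": list(highlighted),
            "reviewer_id": reviewer_id,
            "reviewer_action": action,
            "recorded_at": ts.isoformat(),
        },
        sort_keys=True,
    )


line = audit_entry(
    "enc-001",
    ["assessment: severity qualifier missing"],
    "coder-7",
    "clarification_requested",
)
print(line)
```

Each line can be appended to a write-once store so that internal auditors can replay what the model highlighted and how the reviewer acted.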

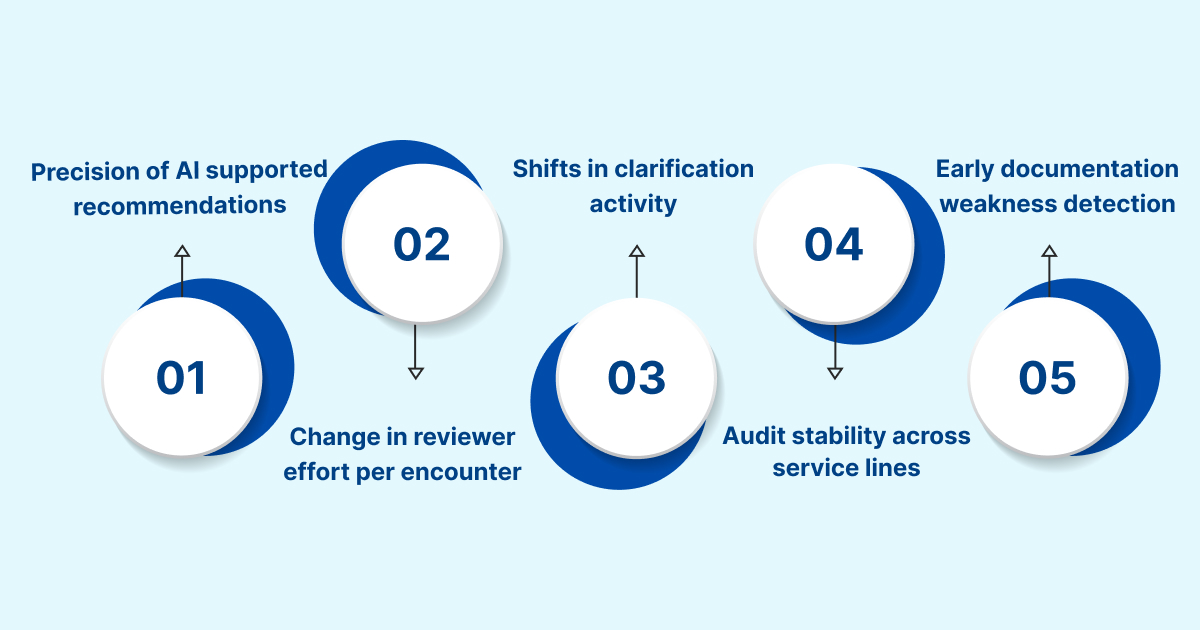

Measuring Operational Impact of Healthcare Generative AI

Measuring value requires focusing on indicators that reflect how well the AI improves interpretation quality, reduces rework and strengthens documentation alignment.

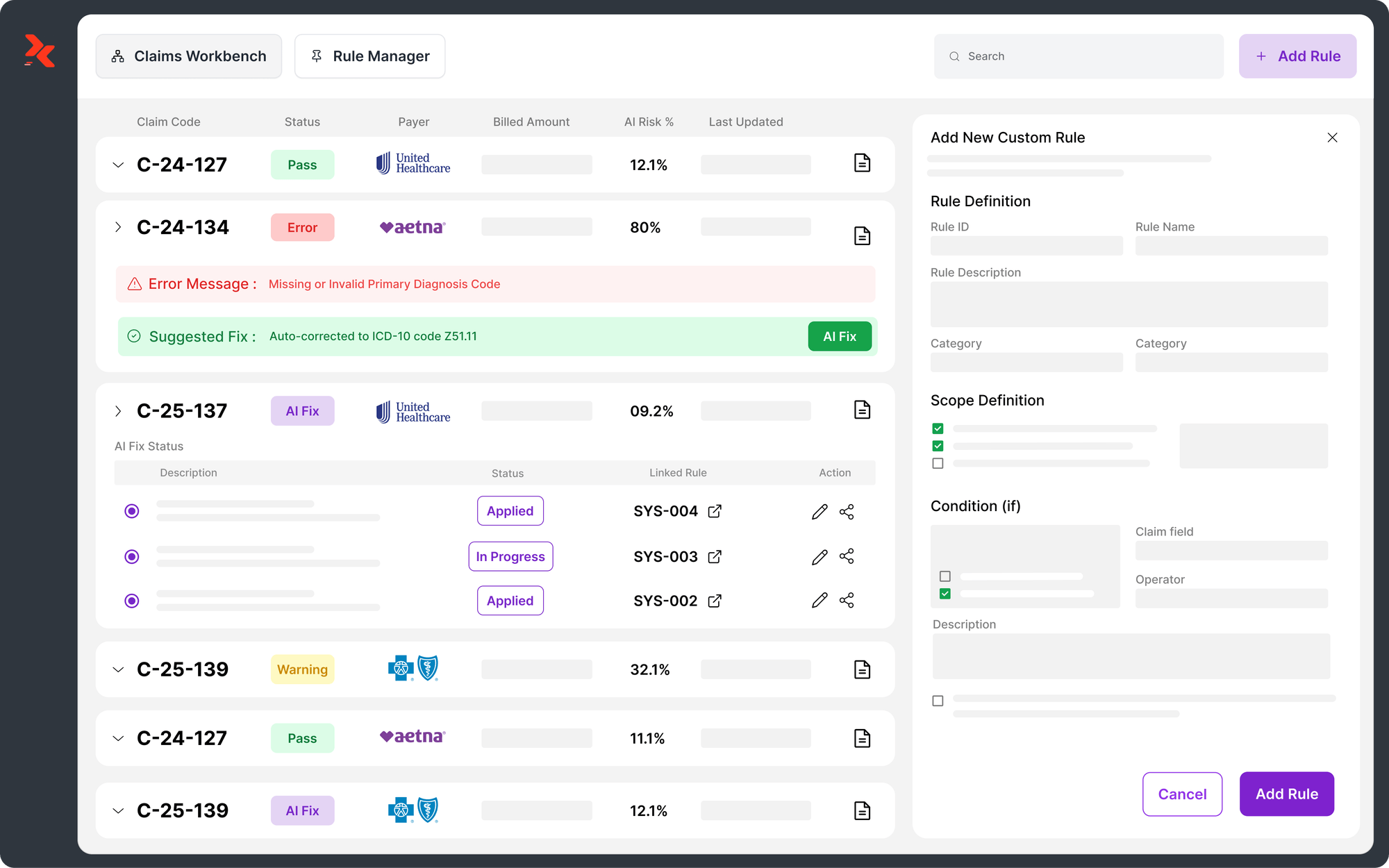

- Precision of AI-supported recommendations: Organisations can track how often reviewers accept, adjust or reject AI suggestions. A high acceptance rate indicates that the model interprets clinical patterns accurately within that organisation’s documentation style.

- Change in reviewer effort per encounter: By comparing time spent on complex charts before and after AI activation, leaders can quantify how much cognitive load the model removes from coders and auditors.

- Shifts in clarification activity: A reduction in unnecessary coder-to-provider clarifications shows that documentation gaps are identified earlier and presented more clearly to reviewers.

- Audit stability across service lines: Improved consistency in internal audit scores signals that the AI is helping reviewers maintain uniform interpretation across clinicians who document differently.

- Early detection of documentation weaknesses: If issues are flagged before final coding, teams can track decreases in avoidable adjustments during second-level review or compliance checks.
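Two of the indicators above reduce to simple arithmetic, sketched here with invented numbers. The function names and the outcome labels `accepted`, `adjusted` and `rejected` are assumptions for illustration. Real measurement would also need matched chart complexity before and after activation.

```python
from collections import Counter
from statistics import mean

OUTCOMES = ("accepted", "adjusted", "rejected")


def suggestion_rates(outcomes):
    """Share of AI suggestions that reviewers accepted, adjusted or rejected."""
    counts = Counter(outcomes)
    total = sum(counts[o] for o in OUTCOMES)
    if total == 0:
        return {}
    return {o: counts[o] / total for o in OUTCOMES}


def effort_change(before_minutes, after_minutes):
    """Relative change in mean reviewer minutes per encounter.

    A negative value means reviewers spent less time after activation.
    """
    baseline = mean(before_minutes)
    return (mean(after_minutes) - baseline) / baseline


# Invented sample data, for illustration only.
print(suggestion_rates(["accepted", "accepted", "adjusted", "rejected"]))
# {'accepted': 0.5, 'adjusted': 0.25, 'rejected': 0.25}
print(round(effort_change([10, 20], [9, 15]), 2))
# -0.2
```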

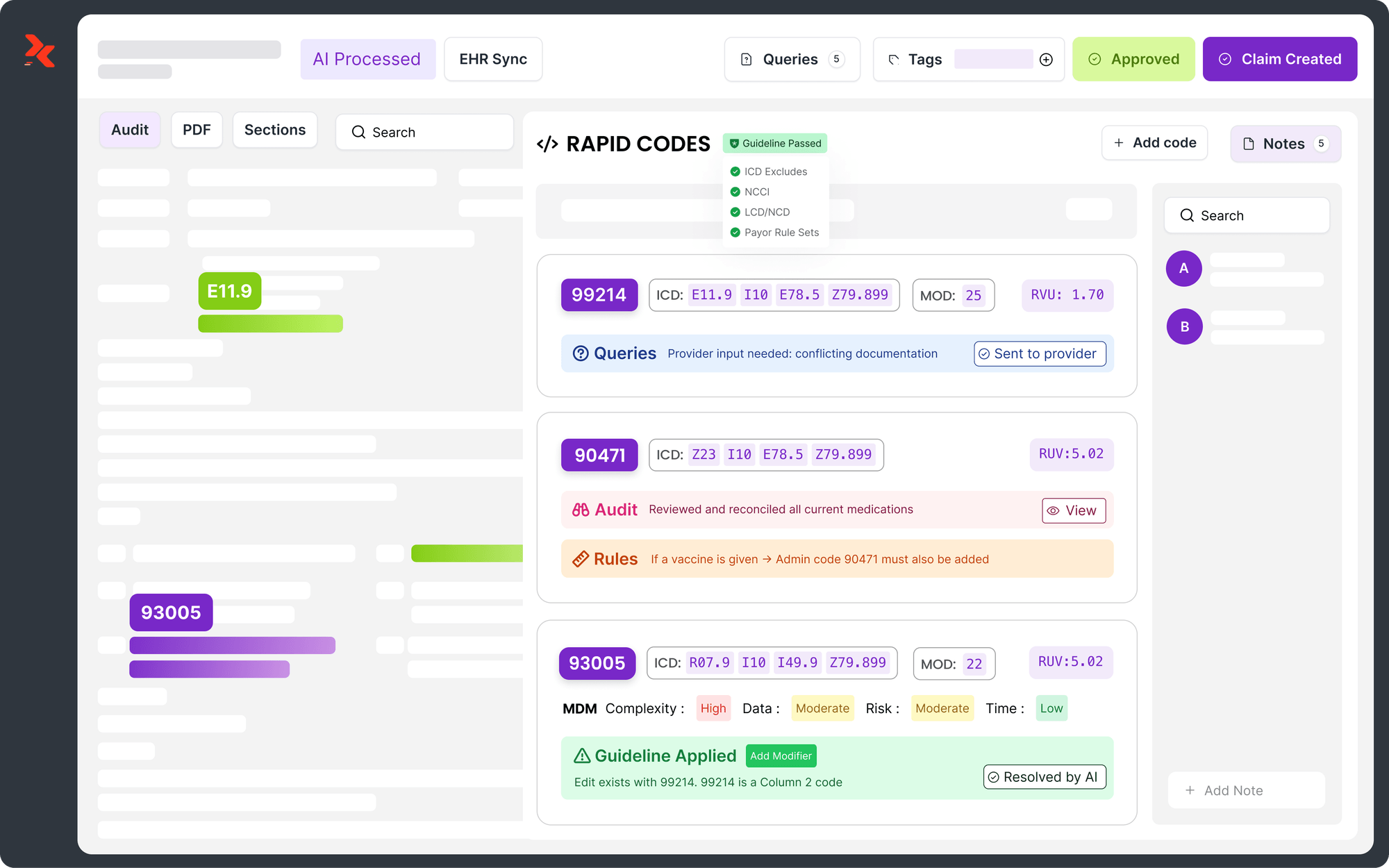

How RapidClaims Applies Healthcare Generative AI

RapidClaims applies healthcare generative AI to strengthen how teams interpret clinical narratives and prepare claims for submission, with a focus on clarity and audit-ready documentation.

- Converts narrative-heavy encounter notes into structured, review-ready insights that help coders understand the clinical story with less manual parsing

- Flags parts of the note that lack the detail required for accurate coding so reviewers can resolve issues before a denial risk develops

- Detects subtle clinical elements that influence code specificity and presents them in a format that supports internal audit validation

- Identifies missed opportunities to capture chronic conditions during risk-based reviews and directs attention to the relevant parts of the encounter

- Connects information across related visits to help teams recognize changes in disease progression that affect coding decisions

- Provides clear reasoning for each suggestion so coders and compliance reviewers can trace how the model interpreted the documentation

- Integrates into EHR and revenue cycle environments using standard data pathways to support adoption without changing established review steps

What Is Next for Healthcare Generative AI in RCM

The next phase of generative AI in revenue cycle operations will focus on strengthening interpretation depth and improving reviewer efficiency across complex encounters.

- Real-time interpretation within clinical workflows: AI will begin surfacing interpretation insights while the clinical note is still being drafted, giving reviewers cleaner inputs without increasing provider workload.

- Context-aware support for high-variability specialties: Specialties with complex narratives, such as cardiology and oncology, will see models trained to understand nuanced disease trajectories that have direct coding implications.

- Alignment between generative reasoning and payer rule shifts: Models will adapt more quickly to evolving coverage criteria so teams can identify specific sections of the note that may conflict with newer payer thresholds.

- Review sequencing that adjusts to case complexity: AI will help route encounters to the right review tier based on the density and ambiguity of the documentation, reducing unnecessary second-level audits.

- Expanded longitudinal analysis for chronic conditions: Models will better track subtle disease changes across long time periods so risk-based programs can maintain more accurate condition continuity.

Conclusion

Generative AI is giving coding and revenue cycle teams a new layer of visibility into documentation that previously required extensive manual interpretation. The ability to pinpoint subtle clinical details, compare documentation patterns across encounters and highlight gaps before a claim advances gives organisations more control over accuracy and audit readiness. These improvements are most impactful in complex service lines and in risk-based programs where documentation consistency influences financial results.

RapidClaims focuses on applying these capabilities in ways that support real reviewer decision making. The platform strengthens how teams interpret clinical narratives, reduces unnecessary clarification cycles and presents reasoning that compliance leaders can validate without slowing workflow.

Request a demo to see how RapidClaims can apply generative AI to the specific documentation and coding challenges within your organisation.

FAQs

Q: How is healthcare generative AI used in real clinical documentation workflows?

A: Healthcare generative AI reviews full encounter narratives and produces structured insights that help coders and auditors understand clinical intent without scanning long notes. It flags unclear sections, highlights relevant clinical evidence and organises information so reviewers can evaluate documentation more efficiently.

Q: Can healthcare generative AI improve accuracy in medical coding and chart review?

A: Yes. It identifies clinically relevant details that influence specificity, surfaces missing documentation elements and presents clear reasoning that supports accurate final coding decisions. These improvements reduce downstream corrections during audit or second-level review.

Q: What are the risks of using generative AI in healthcare documentation?

A: The primary risks involve misinterpreting ambiguous text or suggesting conclusions not supported by the clinical record. Organisations need oversight rules and reviewer controls to ensure every suggestion is validated before coding decisions are made.

Q: Does healthcare generative AI replace coders or does it support reviewer decisions?

A: It supports coders by presenting organised interpretations, not by making final decisions. Human reviewers still determine code selection, confirm documentation sufficiency and validate that evidence aligns with payer expectations.

Q: What data is required for healthcare generative AI to work effectively?

A: The model needs access to complete encounter notes, relevant structured fields and historical clinical context so it can understand progression and documentation patterns. Clean, consistently formatted EHR exports improve output quality and reduce reviewer corrections.

Q: How does healthcare generative AI maintain compliance with CMS and HIPAA requirements?

A: Compliance relies on maintaining clear audit trails, restricting model access to authorized datasets and ensuring outputs reflect what is actually documented in the note. Organisations must validate interpretations regularly so coding and documentation remain aligned with CMS rules and HIPAA privacy standards.

Rejones Patta

Rejones Patta is a knowledgeable medical coder with 4 years of experience in E/M Outpatient and ED Facility coding, committed to accurate charge capture, compliance adherence, and improved reimbursement efficiency at RapidClaims.
